What is Canary Analysis?

We discussed the basics of canary deployment in the blog “What is Canary Deployment?”. Canary deployment is one of the most widely used release strategies because it lowers the risk of moving updates into production while also reducing the need for additional infrastructure.

Today, many applications run in a dynamic environment of microservices, which makes software integration and delivery complex. Deploying updates successfully and consistently in such an environment requires solid automation with tools like Spinnaker.

What is Canary analysis?

Canary analysis is a two-step process in which we assess the canary against a selected set of metrics and logs to decide whether to promote the new version or roll it back. We therefore need to make sure that we gather the right information (metrics and logs) during the test and analyze it rigorously. (From here on, we refer to both metrics and logs simply as “metrics”.)

The Two Steps to Perform Canary Analysis

- Metric selection and evaluation:

This step involves selecting the right metrics for monitoring the health of the application and the canary. We need to create a balanced set of metrics to assess the canary and, if necessary, group the metrics based on how they correlate with each other. Luckily, we perform this step just once for each pipeline.

- Canary hypothesis testing:

This step evaluates the values of the selected metrics to decide whether the canary should be promoted to production or rolled back.

Selection and Evaluation of Metrics

The monitoring systems in microservice-based applications typically generate mountains of data, so analyzing every metric is neither practical nor necessary. Once we have selected the high-impact metrics, adding more metrics mostly increases our effort. But which ones should we choose? Read on!

Selection and Evaluation Criteria

- Most important business metrics

The most important metrics are the ones tied most directly to the purpose of the application. For example, in an online shopping checkout application, be sure to measure the number of transactions per minute, the failure rate, and so on. If any of these metrics falls outside the baseline, you will probably decide to roll back the canary. Most of these metrics are probably already monitored in staging; be sure to check them during the canary process as well.

- Balanced set of fast and slow metrics

A single metric is not sufficient for a meaningful canary process. Some important metrics are available almost immediately, while others take time to show up because they depend on load, network traffic, memory pressure, or other factors. It is important to balance the metrics you choose between these fast and slow metrics. For example, latency checks are a fast metric, whereas server query time may be a slow one. Choose a balanced set of metrics that gives you a strong overall view of the health of the canary.

- Metric smoke test

From the balanced pool of metrics, we have to make sure that some metrics directly indicate a problem in the canary. Although our goal is to assess the health of the canary, it is equally important to detect any underlying problem in it early. For example, a 404 or another unexpected HTTP return code might mean that the test should be stopped immediately and the canary debugged. CPU usage, memory footprint, HTTP return codes (200s, 300s, etc.), response latency, and correctness are a good set of metrics, but the HTTP return codes and response latency point to actual problems that impact users and services. This check is sometimes referred to as a metric smoke test. For the smoke test, point-in-time values (for example, point-in-time CPU usage or network bandwidth) are less useful than changes in those metrics, and they could just add noise to our analysis.

- Metric standard ranging

Each selected metric needs an acceptable range of values that is neither too strict nor too lenient. With an agreed range of acceptable behavior for a metric, we can eliminate bad canaries that would otherwise be incorrectly assessed as good. Choosing these ranges is difficult and is usually done incrementally over time. When in doubt, err on the side of caution: choose a narrow range of acceptable values. We would not want to promote a canary to production that was assessed as good but was actually problematic.

- Metric correlation

With a balanced set of metrics, we now have our basic toolkit ready for canary analysis. Most of these metrics will be interrelated, so finding the correlations between them and grouping them accordingly is crucial. We need to group the metrics so that the groups are isolated from one another. For example, a dramatic increase in the CPU usage of the system as a whole makes a poor metric, because other processes such as database and batch queries might be causing that increase. A better metric would be CPU time spent per request served.
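To make the criteria above concrete, here is a minimal Python sketch of a metric configuration and the checks it supports. Everything in it is hypothetical and for illustration only: the `MetricSpec` fields, the metric names, and the tolerance values are assumptions, not the configuration format of OpsMx Autopilot or any other tool. Each metric records whether it is fast or slow, which correlation group it belongs to, whether it is a business or smoke-test metric, and its acceptable range, expressed as an allowed percentage change relative to the baseline (so the smoke test looks at changes rather than point-in-time values).

```python
from dataclasses import dataclass
from enum import Enum


class Speed(Enum):
    FAST = "fast"  # shows up almost immediately (e.g. response latency)
    SLOW = "slow"  # needs time or load to show up (e.g. server query time)


@dataclass(frozen=True)
class MetricSpec:
    name: str
    speed: Speed
    group: str                    # correlation group the metric belongs to
    tolerance_pct: float          # acceptable change relative to the baseline, in %
    higher_is_worse: bool = True  # False for metrics like transactions per minute
    business: bool = False        # key business metric
    smoke: bool = False           # failing it should stop the canary at once


def is_balanced(specs: list[MetricSpec]) -> bool:
    """A usable set contains at least one fast and one slow metric."""
    speeds = {s.speed for s in specs}
    return Speed.FAST in speeds and Speed.SLOW in speeds


def pct_change(baseline: float, canary: float) -> float:
    """Change of the canary value relative to the baseline value, in percent."""
    if baseline == 0:
        # Assumes non-negative metrics: any change from zero is unbounded.
        return 0.0 if canary == 0 else float("inf")
    return (canary - baseline) / abs(baseline) * 100.0


def within_range(spec: MetricSpec, baseline: float, canary: float) -> bool:
    """True if the canary's change stays inside the metric's acceptable range."""
    change = pct_change(baseline, canary)
    if spec.higher_is_worse:
        return change <= spec.tolerance_pct
    return change >= -spec.tolerance_pct


def smoke_failures(specs: list[MetricSpec], baseline: dict[str, float],
                   canary: dict[str, float]) -> list[str]:
    """Names of smoke-test metrics whose change is outside the acceptable range."""
    return [s.name for s in specs
            if s.smoke and not within_range(s, baseline[s.name], canary[s.name])]


# Hypothetical metric set for a checkout service.
SPECS = [
    MetricSpec("http_5xx_rate", Speed.FAST, "errors", tolerance_pct=5, smoke=True),
    MetricSpec("p99_latency_ms", Speed.FAST, "latency", tolerance_pct=10, smoke=True),
    MetricSpec("db_query_time_ms", Speed.SLOW, "database", tolerance_pct=15),
    MetricSpec("checkouts_per_min", Speed.FAST, "business", tolerance_pct=5,
               higher_is_worse=False, business=True),
]

baseline = {"http_5xx_rate": 0.5, "p99_latency_ms": 200.0,
            "db_query_time_ms": 40.0, "checkouts_per_min": 100.0}
canary = {"http_5xx_rate": 2.0, "p99_latency_ms": 205.0,
          "db_query_time_ms": 41.0, "checkouts_per_min": 97.0}

print(is_balanced(SPECS))                       # True
print(smoke_failures(SPECS, baseline, canary))  # ['http_5xx_rate']
```

With these sample values, only `http_5xx_rate` trips the smoke test: the canary's error rate is four times the baseline's (+300%), far outside its 5% tolerance, so the run would be stopped for debugging right away. The 3% drop in checkouts per minute stays inside that metric's range.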

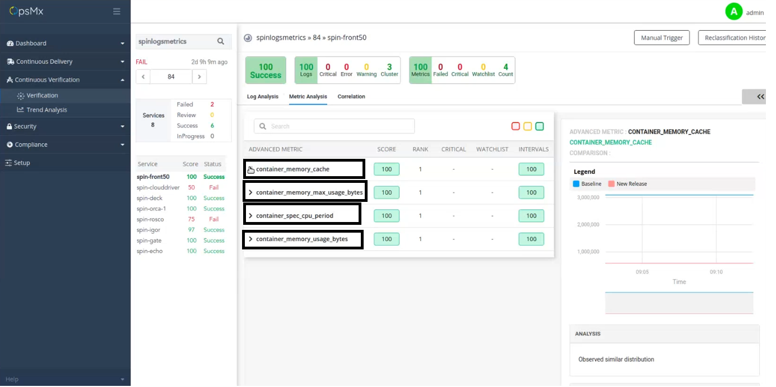

A screenshot of OpsMx Autopilot running a canary analysis with a set of 4 metric clusters.
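Clusters like the ones in the screenshot can be derived from the data itself. One simple approach, shown here as a minimal sketch and not as Autopilot's actual algorithm, is to compute the Pearson correlation between every pair of metric time series and place metrics whose absolute correlation meets a threshold in the same group. Groups are connected components, tracked with a small union-find. The `group_metrics` function, the 0.8 threshold, and the sample series are assumptions for illustration.

```python
import math
from itertools import combinations


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equally long series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / (sx * sy)


def group_metrics(series: dict[str, list[float]], threshold: float = 0.8) -> list[set[str]]:
    """Group metrics whose |correlation| >= threshold (connected components)."""
    parent = {name: name for name in series}

    def find(name: str) -> str:
        # Follow parent links to the group's representative, halving the path.
        while parent[name] != name:
            parent[name] = parent[parent[name]]
            name = parent[name]
        return name

    for a, b in combinations(series, 2):
        if abs(pearson(series[a], series[b])) >= threshold:
            parent[find(a)] = find(b)  # merge the two groups

    groups: dict[str, set[str]] = {}
    for name in series:
        groups.setdefault(find(name), set()).add(name)
    return list(groups.values())


# Hypothetical one-minute samples collected during a canary run.
series = {
    "requests": [100, 120, 140, 160, 180],
    "cpu_ms": [50, 61, 69, 80, 91],
    "p99_latency_ms": [200, 198, 205, 199, 201],
    "errors": [1, 1, 2, 1, 1],
}
print(group_metrics(series))
```

In the sample data, request volume and CPU time move together, as do p99 latency and error count, so the function returns two groups: one with `requests` and `cpu_ms`, and one with `p99_latency_ms` and `errors`. Because groups are connected components, two weakly correlated metrics can still land together if each correlates strongly with a third; raising the threshold keeps more metrics apart.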

Canary Hypothesis Testing

The last step of canary analysis is evaluating the values of the selected metrics. This tells us whether the canary instance should be promoted to production or not.

Before each test, we define two hypotheses and, based on our data, reject one of them:

H0: The canary is bad (roll back)

H1: The canary is good (roll forward)
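One way to encode this framing, shown as a minimal sketch, is to treat H0 as the default outcome: the pipeline rolls back unless the evidence from every metric group supports H1. The `Decision` enum, the `decide` function, and the idea of a per-group pass/fail verdict are assumptions for illustration; the verdicts themselves could come from checks such as acceptable ranges or similarity tests.

```python
from enum import Enum


class Decision(Enum):
    ROLL_BACK = "H0: the canary is bad"
    ROLL_FORWARD = "H1: the canary is good"


def decide(evidence: dict[str, bool]) -> Decision:
    """Keep H0 (roll back) unless every metric group supports H1."""
    # No evidence at all is not enough to reject H0.
    if evidence and all(evidence.values()):
        return Decision.ROLL_FORWARD
    return Decision.ROLL_BACK


print(decide({"errors": True, "latency": True}))   # Decision.ROLL_FORWARD
print(decide({"errors": True, "latency": False}))  # Decision.ROLL_BACK
```

Defaulting to H0 mirrors the conservative stance recommended for metric ranges: an empty or incomplete set of evidence never promotes a canary.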

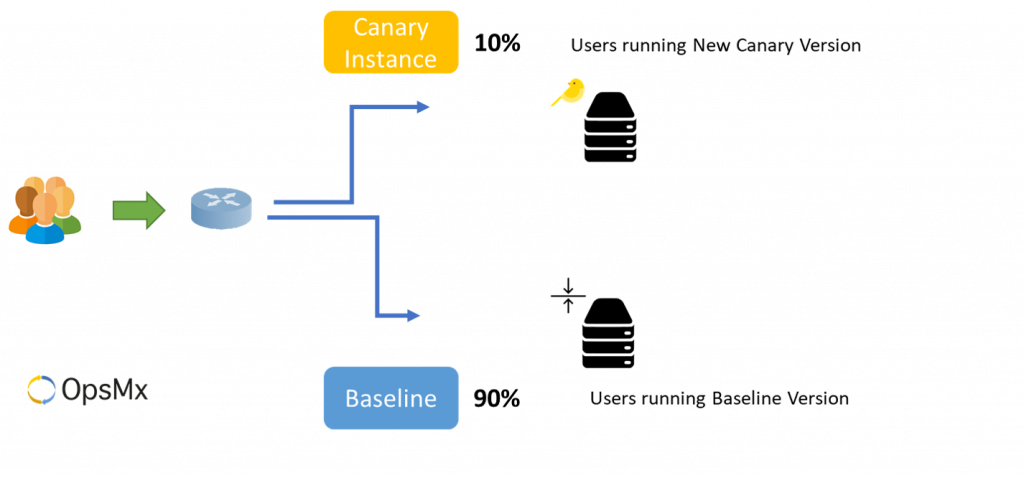

Canary Hypothesis Testing with 10% of Baseline

A simple analysis might compare the canary's results with those of the larger baseline version already running in production. However, this comparison often leads to incorrect decisions.

A canary receiving 10% of the traffic may not behave abnormally, but there is no way to be confident that it will behave the same at 30% (or 60%, or 90%) of the traffic, for the following reasons:

- The canary has just been released and has been in production for only minutes, whereas the metrics for the baseline version probably cover weeks or months. Comparing them will be misleading.

- Only a small share of the traffic is routed to the canary, so the load on it is probably not proportional to the load on the baseline.

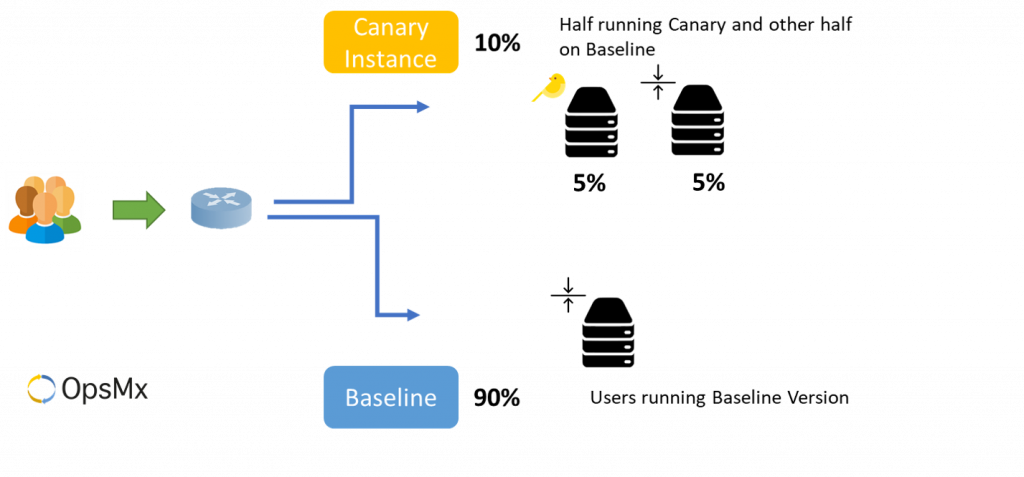

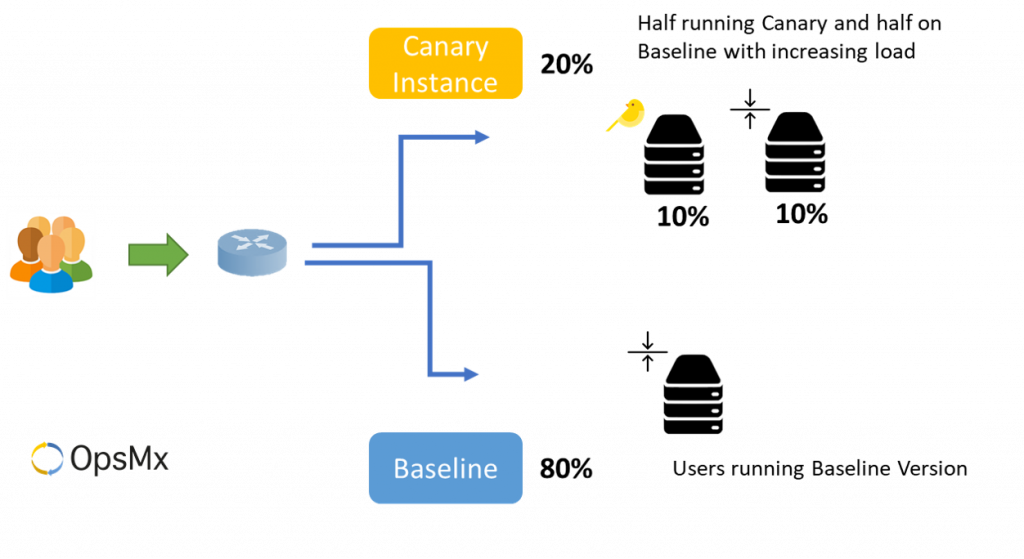

This issue can be resolved with an A|B testing strategy. Instead of comparing the canary with the baseline in production, we split the canary infrastructure into two halves, deploying the baseline version into one half and the new canary into the other. This creates a level playing field on which we can accurately gauge whether the new canary compares favorably with the baseline. Because these instances are small, the cost of running a separate A|B test inside a canary deployment is minimal.
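The traffic arithmetic behind this A|B setup is simple. In this minimal sketch (the `TrafficSplit` type and `ab_canary_split` function are hypothetical), the share of traffic reserved for the canary is split evenly between a freshly deployed copy of the current version and the new version, so both halves start at the same time and see comparable load.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficSplit:
    production: float  # % of traffic still served by the existing fleet
    baseline: float    # % served by a freshly deployed copy of the current version
    canary: float      # % served by the new version


def ab_canary_split(canary_share: float) -> TrafficSplit:
    """Split the canary's share of traffic evenly between a fresh baseline and the canary."""
    if not 0 < canary_share <= 100:
        raise ValueError("canary_share must be in (0, 100]")
    half = canary_share / 2
    return TrafficSplit(production=100 - canary_share, baseline=half, canary=half)


print(ab_canary_split(10))  # TrafficSplit(production=90, baseline=5.0, canary=5.0)
```

For a 10% canary, production keeps 90% of the traffic while the fresh baseline and the canary each receive 5%, which is what makes their metrics directly comparable.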

The canary is analyzed by comparing percentage differences or quantile differences between the canary and the baseline instance. A Wilcoxon rank-sum test is then performed to check whether the two instances behave similarly, and the resulting similarity score determines the next step. If the results are favorable, the share of traffic sent to the canary is increased (for example, from 10% to 30%) and the analysis is repeated. This cycle of increasing traffic and re-analyzing continues until we are confident that the canary has generated enough data under “real world” conditions to decide whether to promote it or roll it back.
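The comparison and ramp-up described above can be sketched with the Python standard library. This is a simplified illustration under stated assumptions, not Autopilot's implementation: the rank-sum p-value uses the large-sample normal approximation without tie or continuity corrections, the `collect` hook that gathers baseline and canary samples at each traffic step is hypothetical, and the thresholds (`alpha=0.05`, a 10% limit on the mean difference) and traffic steps are illustrative. Here a small p-value is read as “the canary behaves differently from the baseline”.

```python
import math
from statistics import mean, quantiles


def pct_diff(baseline: list[float], canary: list[float]) -> float:
    """Canary mean vs. baseline mean, in percent (baseline mean must be non-zero)."""
    b = mean(baseline)
    return (mean(canary) - b) / b * 100.0


def quantile_diff(baseline: list[float], canary: list[float], q: int = 95) -> float:
    """Canary q-th percentile minus baseline q-th percentile."""
    cut = q - 1  # quantiles(n=100) returns the 99 cut points P1..P99
    return (quantiles(canary, n=100, method="inclusive")[cut]
            - quantiles(baseline, n=100, method="inclusive")[cut])


def rank_sum_p_value(x: list[float], y: list[float]) -> float:
    """Two-sided Wilcoxon rank-sum test via the normal approximation."""
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # tied values share their average 1-based rank
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, src) in zip(ranks, pooled) if src == 0)  # rank sum of x
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def run_ramp(steps, collect, alpha=0.05, max_pct_diff=10.0):
    """Raise canary traffic step by step; stop at the first step that fails."""
    for pct in steps:
        baseline, canary = collect(pct)  # hypothetical: samples gathered at pct% traffic
        similar = rank_sum_p_value(baseline, canary) >= alpha
        if not similar or abs(pct_diff(baseline, canary)) > max_pct_diff:
            return ("roll back", pct)
    return ("promote", steps[-1])


same = [float(v) for v in range(1, 11)]
shifted = [v + 10 for v in same]
print(run_ramp([10, 30, 60, 90], lambda pct: (same, same)))  # ('promote', 90)
print(run_ramp([10, 30, 60, 90],
               lambda pct: (same, same if pct < 30 else shifted)))  # ('roll back', 30)
```

The rank-sum test compares the two samples without assuming a particular distribution, which suits skewed metrics such as latency; the percentage and quantile differences add a check on how large any shift is. A production implementation would also apply a tie correction and consider the direction of each difference, since a canary that is faster than the baseline should not be rolled back for it.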

The Conclusion

As software deployments grow in scale and velocity, it becomes crucial to have a proper system in place that assesses releases before they damage the production environment, leading to losses for the organization and irate customers. Canary deployments are an excellent way to reduce the risk of introducing a defect into production; they are relatively low cost and, as long as appropriate automation is involved, do not slow the process much. One way to speed up canary analysis is to use OpsMx Autopilot to automate the verification process. For detailed information on Autopilot, check out the Product Page.

An enterprise must evaluate a holistic continuous delivery solution that can automate, or facilitate the automation of, the stages described above. If you are in the process of automating your CI/CD pipelines, OpsMx Enterprise for Spinnaker can help.

If you are well versed in Spinnaker, do check out “OpsMx Enterprise for Spinnaker Cloud Trial”.


Reference: Canary Releases from SRE Book by Google