Unshackle Developers from Kubernetes Deployment Complexities and Gain Speed

“In the world of Kung Fu, Speed defines the Winner.” This adage from the movie Kung Fu Hustle holds true for enterprise success in general. Today, the quest for speed, both in developing software and in shipping it to end customers, drives organizations nuts. Hence organizations want to embrace Continuous Deployment, a strategy for releasing new software automatically and safely into production environments. One of the areas most critical to advanced enterprises is the process of deploying applications into containers. The rate of software deployments into containers has not kept pace with the rate at which software is built: the care needed to handle the verbose configuration of a container orchestrator usually takes away the speed needed to deliver software on time. In this article, we focus on how Spinnaker, a Continuous Delivery platform, can automate deployments into Kubernetes safely.

1. Why does everyone love Kubernetes (K8S)?

Developers who would rather not deal with infrastructure use Kubernetes, an open-source orchestration system for deploying, scaling, and load-balancing containers. The compelling features that have led many enterprises (especially those developing cloud applications) to adopt K8S widely are:

- Automated bin packing: automatically places containers onto nodes based on their resource requirements

- Automated scaling: adds or removes containers at runtime based on observed resource usage

- Self-healing: automatically restarts failed containers or creates new ones to replace them

- Automated rollouts: rolls out changes to an application or its configuration

- Service discovery and load balancing: gives Pods their own IP addresses and a single DNS name for a set of Pods, and load-balances traffic across them
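To make the automated scaling feature concrete: the Kubernetes Horizontal Pod Autoscaler documentation gives its core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that rule with the default 10% tolerance and clamps the result to minimum and maximum replica counts. It is a simplification, not the controller's code: it ignores stabilization windows, unready Pods, and missing metrics, and the default bounds of 1 and 10 are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler calculation.

    Core rule: desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within +/- tolerance of 1.0.
    Stabilization windows and Pod readiness handling are omitted.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (minReplicas / maxReplicas).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))  # 6
    # 62% against 60% is within the tolerance band: unchanged.
    print(desired_replicas(4, 62, 60))  # 4
    # 15% against 60%: scale in.
    print(desired_replicas(4, 15, 60))  # 1
```

The tolerance band is what keeps the autoscaler from flapping when usage hovers just around the target.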

2. Why might the deployment process into K8S not be as lovely?

Typical steps involved in manually deploying a simple application into K8S are:

- Pull images from a public registry such as Docker Hub or Google Container Registry. These deployments have to be repeated for at least the dev, stage, and production environments.

- Use kubectl to create and verify deployments and to inspect their details.

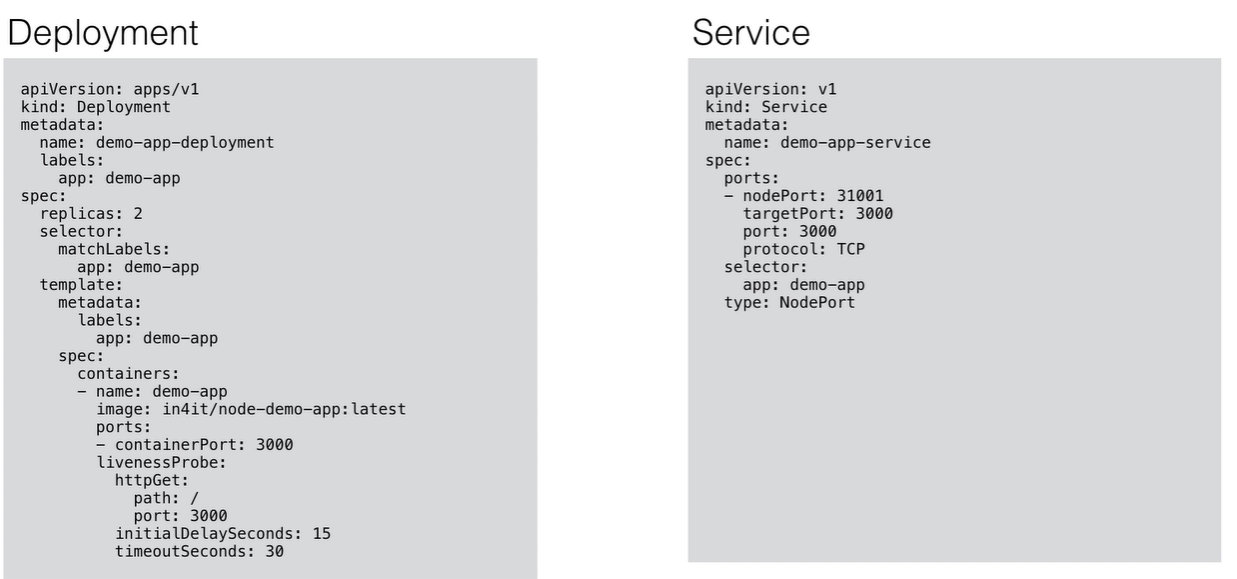

- For example, in K8S v2 one has to configure manifest files for the deployment and the service of each new application (see Fig A).

- Querying the status of a deployment to confirm that it succeeded is also complex.

- Deploying complex microservice-based applications is usually lengthy. Complex tests such as canary analysis only add to deployment time, because they require additional scripting and lack established industry best practices. (Bells ringing!)
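The status query in the list above is what `kubectl rollout status deployment/<name>` automates. The sketch below reproduces, in simplified form, the checks that command applies to the Deployment object returned by `kubectl get deployment <name> -o json`: the controller must have observed the latest spec, the progress deadline must not have been exceeded, and every replica must be updated and available with no old replicas left. Treat it as an illustration of the logic; in a script you would normally just call `kubectl rollout status`.

```python
import json


def rollout_status(deployment: dict) -> tuple[bool, str]:
    """Return (done, message) for a parsed Deployment object.

    Status counters are omitted from the JSON when they are zero,
    hence the .get(..., 0) defaults.
    """
    meta = deployment.get("metadata", {})
    spec = deployment.get("spec", {})
    status = deployment.get("status", {})
    name = meta.get("name", "<unknown>")

    # The controller has not processed the latest spec change yet.
    if meta.get("generation", 0) > status.get("observedGeneration", 0):
        return False, "waiting for the deployment spec update to be observed"

    for cond in status.get("conditions", []):
        if cond.get("type") == "Progressing" and cond.get("reason") == "ProgressDeadlineExceeded":
            raise RuntimeError(f"deployment {name!r} exceeded its progress deadline")

    desired = spec.get("replicas", 1)  # Kubernetes defaults spec.replicas to 1
    updated = status.get("updatedReplicas", 0)
    total = status.get("replicas", 0)
    available = status.get("availableReplicas", 0)

    if updated < desired:
        return False, f"{updated} of {desired} new replicas updated"
    if total > updated:
        return False, f"{total - updated} old replicas pending termination"
    if available < updated:
        return False, f"{available} of {updated} updated replicas available"
    return True, f"deployment {name!r} successfully rolled out"


if __name__ == "__main__":
    sample = json.loads("""
    {"metadata": {"name": "web", "generation": 3},
     "spec": {"replicas": 3},
     "status": {"observedGeneration": 3, "replicas": 4,
                "updatedReplicas": 3, "availableReplicas": 2}}
    """)
    print(rollout_status(sample))  # (False, '1 old replicas pending termination')
```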

Fig A:

Developers are expected to be proficient in specifying configuration such as the API version, kind, metadata, name, replicas, and Istio-enabled apps in the deployment manifest file.
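To illustrate the repetition, the sketch below generates a Deployment (`apps/v1`) and a Service (`v1`) for each environment from one set of parameters, wrapped in a `v1` `List` and serialized as JSON, which `kubectl apply -f` accepts. The app name `web`, the image `registry.example.com/web:1.4.2`, the ports, and the per-environment replica counts are hypothetical, and Istio settings are left out.

```python
import json

# Hypothetical per-environment replica counts.
ENVIRONMENTS = {"dev": 1, "stage": 2, "prod": 3}


def deployment_manifest(app: str, image: str, env: str, replicas: int) -> dict:
    labels = {"app": app, "env": env}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{app}-{env}", "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template's labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": app,
                        "image": image,
                        "ports": [{"containerPort": 8080}],
                    }]
                },
            },
        },
    }


def service_manifest(app: str, env: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{app}-{env}"},
        "spec": {
            # Routes traffic to Pods carrying these labels.
            "selector": {"app": app, "env": env},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }


def manifests_for_all_envs(app: str, image: str) -> dict:
    """Return {env: List object holding that environment's Deployment and Service}."""
    return {
        env: {
            "apiVersion": "v1",
            "kind": "List",
            "items": [deployment_manifest(app, image, env, replicas),
                      service_manifest(app, env)],
        }
        for env, replicas in ENVIRONMENTS.items()
    }


if __name__ == "__main__":
    docs = manifests_for_all_envs("web", "registry.example.com/web:1.4.2")
    # Save each document to a file and apply it with: kubectl apply -f <file>
    print(json.dumps(docs["prod"], indent=2))
```

Even generated, the manifests still have to be applied, verified, and rolled back per environment by hand, which is the gap a deployment pipeline fills.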

2.1 Can verbose deployments be fast and safe?

Manual deployment is not only exhausting and lengthy but also repetitive, since it must be done for each environment (dev/test/prod). So developers who plan to release software rapidly end up burning their bandwidth on understanding and running long deployment processes in K8S clusters. Quite often, no tests are run to check system health after a new deployment, and this spray-and-pray approach increases the risk of deployment failures. Additionally, a small human error during the process can turn a deployment into an eventful, attention-consuming ceremony. Hence, manual and repetitive deployments of declarative specifications to K8S leave the question of fast and safe unanswered.

3. Is there a solution to gain deployment speed while maintaining safety?

Delivering new features at scale requires the ability to release software changes at high speed while ensuring that deployments rolled out to production are safe. Spinnaker brings good news on both counts:

A. Making deployment faster

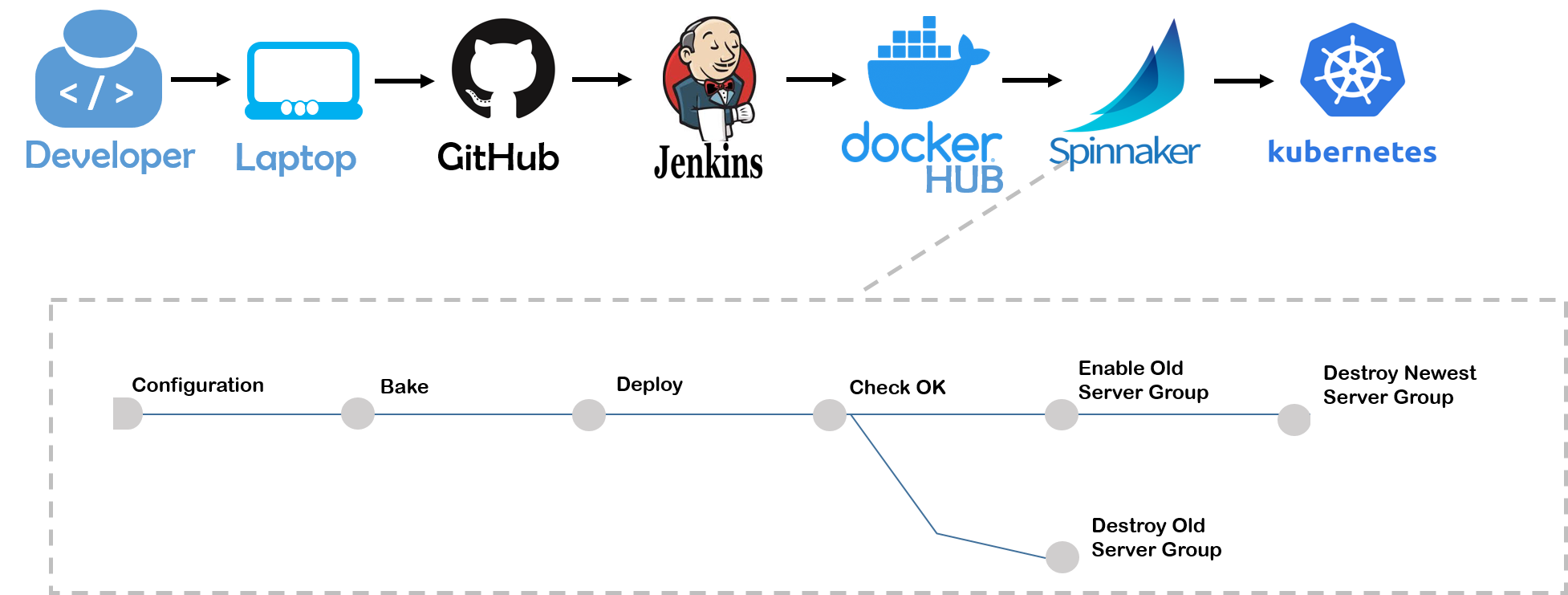

- Spinnaker offers opinionated pipelines for automated deployments (see Fig B). Its workflow constructs are built for repetitive deployments and can bake Amazon Machine Images (AMIs) or Docker images on demand, find images in existing K8S clusters and deploy them, modify server groups, and run containers in K8S.

- It offers various deployment strategies, such as Red/Black, Rolling Red/Black, and Highlander. Under the hood, these strategies also interact with K8S Pod autoscalers to ensure that capacity is maintained during a deployment. It also versions ConfigMaps and Secrets and performs immutable server deployments, so a rollback restores the exact configuration that was used alongside the binaries.

- It applies artificial intelligence to logs, APM data, and metrics to detect issues in newly deployed applications and, if it finds any, rolls the new application back. Before doing so, it ensures that the previous server group is properly sized and receiving 100% of traffic, and only then disables and deletes the newly created server group.

- Spinnaker integrates with CI tools (Jenkins, Travis), repositories (Git, Docker Hub, AWS ECR), and providers such as AWS, Azure, K8S, and GCP.
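The strategies and rollback order described above can be modeled in a few lines. The sketch below is a hypothetical in-memory simulation, not Spinnaker's implementation: a `Cluster` holds `ServerGroup` objects with a size and a traffic percentage. A single 100% step is a red/black cut-over, several steps approximate rolling red/black, and `highlander` deletes the old group on success. On a failed health check it follows the rollback order from the text: restore the previous group's size, give it 100% of traffic, and only then remove the new group. The `healthy` callback is an assumed stand-in for whatever analysis of metrics, logs, or APM data decides that a version is bad.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class ServerGroup:
    """Hypothetical model of one deployed version (a server group)."""
    name: str
    size: int = 0       # number of running replicas
    traffic: int = 0    # percent of requests routed to this group

    @property
    def enabled(self) -> bool:
        return self.traffic > 0


@dataclass
class Cluster:
    groups: List[ServerGroup] = field(default_factory=list)

    def route(self, weights: dict) -> None:
        # Weights are percentages and must cover all traffic.
        if sum(weights.values()) != 100:
            raise ValueError("traffic weights must sum to 100")
        for g in self.groups:
            g.traffic = weights.get(g.name, 0)


def rollback(cluster: Cluster, old: ServerGroup, new: ServerGroup, size: int) -> None:
    # Restore capacity and route all traffic to the previous group first;
    # routing it 100% also disables the new group, which is then deleted.
    old.size = max(old.size, size)
    cluster.route({old.name: 100})
    cluster.groups.remove(new)


def deploy(cluster: Cluster, old: ServerGroup, new: ServerGroup, size: int,
           strategy: str = "red_black",
           steps: Sequence[int] = (100,),
           healthy: Callable[[ServerGroup], bool] = lambda g: True) -> bool:
    """Shift traffic from `old` to `new`; return False if rolled back.

    'red_black' keeps the old group disabled (fast rollback later);
    'highlander' deletes it once the new group has taken all traffic.
    """
    new.size = size                 # bring the new group up at full size first
    cluster.groups.append(new)
    for pct in steps:
        cluster.route({new.name: pct, old.name: 100 - pct})
        if not healthy(new):
            rollback(cluster, old, new, size)
            return False
    if strategy == "highlander":
        cluster.groups.remove(old)
    return True


if __name__ == "__main__":
    c = Cluster([ServerGroup("web-v001", size=3, traffic=100)])
    ok = deploy(c, c.groups[0], ServerGroup("web-v002"), size=3,
                steps=(25, 50, 100), healthy=lambda g: g.traffic < 50)
    print(ok, [(g.name, g.size, g.traffic) for g in c.groups])
    # False [('web-v001', 3, 100)]
```

In this run the hypothetical health check fails once the new group reaches 50% of traffic, so the deployment is rolled back and only `web-v001` remains, at full size with all traffic. Keeping the old group disabled instead of deleted is what lets red/black roll back without waiting for new capacity.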

B. Making deployment safe

- It offers control over the date and time of a deployment. Code that carries a risk of downtime should not be deployed during peak traffic periods. Deployment windows let pipelines ensure that deployments into K8S happen outside these peak periods, so customer experience (Cx) is not affected.

- Automated Canary Analysis (ACA) in Spinnaker minimizes the risk of deploying a new version to a K8S production environment: it routes a small amount of traffic to the new version and compares the metrics it emits with those of the old version it would replace.

- Notifications let pipelines alert users quickly when errors occur. Pipelines can be configured to notify stakeholders by email, SMS, or Slack message at all levels.

- For security, Spinnaker integrates with authentication protocols such as LDAP and SAML, and supports authorization through Google Groups, SAML roles, GitHub Teams, and others.
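Two of the safety features above reduce to simple checks. In the sketch below, `in_deployment_window` tests whether a time falls inside an allowed off-peak window (including windows that cross midnight), and `canary_score` plus `judge` imitate the shape of an automated canary verdict: the percentage of metrics for which the canary is no more than 10% worse than the baseline, compared against marginal and pass thresholds. This is a deliberately simplified stand-in, not Spinnaker's canary judge, which classifies metric time series with statistical tests; the metric names, the 10% margin, and the 50/75 thresholds are assumptions, and every metric is assumed to be lower-is-better.

```python
from datetime import datetime, time
from typing import Dict, List, Tuple

Window = Tuple[time, time]


def in_deployment_window(now: datetime, windows: List[Window]) -> bool:
    """True if `now` (already in the team's local zone) is inside an allowed window."""
    t = now.time()
    for start, end in windows:
        if start <= end:
            if start <= t < end:
                return True
        elif t >= start or t < end:   # window wraps past midnight, e.g. 22:00-04:00
            return True
    return False


def canary_score(baseline: Dict[str, float], canary: Dict[str, float],
                 max_increase: float = 0.10) -> float:
    """Percent of metrics where the canary is at most `max_increase` worse."""
    passed = sum(1 for metric, base in baseline.items()
                 if canary[metric] <= base * (1 + max_increase))
    return 100.0 * passed / len(baseline)


def judge(score: float, marginal: float = 50.0, pass_: float = 75.0) -> str:
    # Hypothetical thresholds: promote, ask a human, or roll back.
    if score >= pass_:
        return "promote"
    if score >= marginal:
        return "needs-review"
    return "rollback"


if __name__ == "__main__":
    off_peak = [(time(22, 0), time(4, 0))]   # hypothetical low-traffic window
    print(in_deployment_window(datetime(2024, 5, 1, 23, 30), off_peak))  # True
    print(in_deployment_window(datetime(2024, 5, 1, 12, 0), off_peak))   # False

    baseline = {"error_rate": 0.010, "p99_latency_ms": 250.0, "cpu": 0.55}
    canary = {"error_rate": 0.030, "p99_latency_ms": 260.0, "cpu": 0.56}
    score = canary_score(baseline, canary)
    print(round(score, 1), judge(score))
```

With the sample data the error rate fails the check while latency and CPU pass, so two of three metrics pass and the verdict is `needs-review`.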

Fig B: The pipeline above represents how Spinnaker can complement Kubernetes to provide a robust CI/CD value chain.
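A pipeline such as the one in Fig B is a directed graph of stages. The sketch below defines a hypothetical six-stage pipeline whose `refId` and `requisiteStageRefIds` fields are loosely modeled on Spinnaker's pipeline JSON, and uses Python's `graphlib` (3.9+) to group the stages into waves that can run in parallel once their prerequisites finish. The stage names are illustrative only; they are not Spinnaker stage types.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline definition.
PIPELINE = [
    {"refId": "1", "name": "Bake image", "requisiteStageRefIds": []},
    {"refId": "2", "name": "Deploy to dev", "requisiteStageRefIds": ["1"]},
    {"refId": "3", "name": "Deploy to stage", "requisiteStageRefIds": ["2"]},
    {"refId": "4", "name": "Canary analysis", "requisiteStageRefIds": ["3"]},
    {"refId": "5", "name": "Notify on Slack", "requisiteStageRefIds": ["3"]},
    {"refId": "6", "name": "Deploy to prod (red/black)", "requisiteStageRefIds": ["4"]},
]


def execution_waves(stages):
    """Group stages into waves; stages in the same wave may run in parallel."""
    by_id = {s["refId"]: s for s in stages}
    # Map each stage to the stages it depends on.
    sorter = TopologicalSorter({s["refId"]: s["requisiteStageRefIds"] for s in stages})
    sorter.prepare()  # raises graphlib.CycleError if the stages form a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append([by_id[r]["name"] for r in ready])
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(PIPELINE), start=1):
        print(i, wave)
```

Here the canary analysis and the Slack notification share a wave because both depend only on the stage deployment, and a cyclic definition is rejected with `graphlib.CycleError` before anything runs.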

Value realized with Continuous Deployment to Kubernetes with OpsMx Enterprise for Spinnaker:

Benefits:

1. Decrease the time it takes a developer to onboard and deploy new software to a few minutes
2. Increase developer productivity by at least 50%
3. Decrease delivery duress and increase production handover reliability by at least 70%

Values:

1. Increased competitiveness of the organization
2. Near-zero interruption in service delivery
3. Enhanced customer experience (Cx)

Conclusion

There are many ways to deploy legacy applications or microservices to Kubernetes, but not all of them are justifiable for an enterprise with a strong mission to embrace speed in its service delivery. Automated deployment through Spinnaker empowers developers who are in thrall to deployment activities to stay focused and produce features at the speed enterprises need to remain winners on their turf.