The Top Challenges of Implementing Continuous Delivery with Kubernetes

Kubernetes is the leading container orchestration system, and it has a vast ecosystem of open source and commercial components built around it. Today, in the wake of the pandemic, even more enterprises are considering Kubernetes a central part of their IT transformation journey. Kubernetes is a great container management tool because it offers:

- Automated bin packing,

- Scaling & self-healing containers,

- Service discovery, and

- Load balancing.

However, Kubernetes alone may not make an organization agile, because it was never meant to be a continuous delivery system. This blog highlights some of the challenges of deploying with Kubernetes and, based on our work with hundreds of organizations, how to overcome them and unlock the full potential of cloud-native.

Challenges in Deployments while Adopting Kubernetes

Deployment Complexities and Use of Scripts

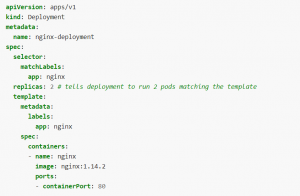

Deploying an application to Kubernetes is not straightforward, because it often involves a lot of manual scripting. For instance, an engineer has to write a Kubernetes Deployment manifest as a YAML or JSON file (shown below) and run kubectl commands to deploy the application:

nginx deployment

While this may look easy to some experts for a single deployment, it becomes an arduous task when the goal is to deploy to dev, QA, and prod multiple times per day. It also requires substantial familiarity with Kubernetes, which not every team member has. More often than not, organizations end up relying on scripts and ad hoc kubectl commands that slow deployments down.
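To make that scripting burden concrete, here is a minimal Python sketch of the kind of helper teams end up maintaining. It builds an `apps/v1` Deployment manifest for nginx and renders one copy per environment. The image tag `nginx:1.25`, the namespace names, and the replica counts are illustrative assumptions, and the namespaces are assumed to exist. Because kubectl accepts JSON manifests as well as YAML, the standard library's `json` module is enough.

```python
import json

# Hypothetical environments and replica counts; assumes namespaces dev, qa, prod exist.
ENVIRONMENTS = {"dev": 1, "qa": 2, "prod": 5}


def nginx_deployment(namespace: str, replicas: int, image: str = "nginx:1.25") -> dict:
    """Build an apps/v1 Deployment manifest for nginx as a plain dict."""
    labels = {"app": "nginx"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx-deployment", "namespace": namespace, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": "nginx", "image": image, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


def render_manifests(image: str = "nginx:1.25") -> dict:
    """Return {filename: JSON text}, one Deployment manifest per environment."""
    return {
        f"nginx-{env}.json": json.dumps(nginx_deployment(env, replicas, image), indent=2)
        for env, replicas in ENVIRONMENTS.items()
    }


if __name__ == "__main__":
    for filename, text in render_manifests().items():
        # Each environment still needs its own file, kube context, and kubectl call.
        print(f"# {filename}: save this JSON, then run `kubectl apply -f {filename}`")
        print(text)
```

Even a helper this small has to be versioned, reviewed, and kept in step with every cluster and kube context it targets, which is how script sprawl begins.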

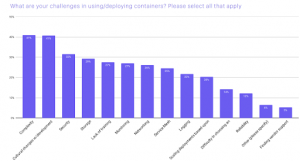

A recent CNCF survey of 1,500 respondents reports that complexity and cultural change around using and deploying containers remain the top challenges to Kubernetes adoption.

CNCF Survey on challenges w.r.t. using and deploying containers

Overdependence on experts and burnout

A lack of Kubernetes knowledge and expertise makes developers and application teams heavily dependent on the DevOps team (also referred to as the release team) to continuously help them create K8S objects such as Deployments, ReplicaSets, StatefulSets, and DaemonSets. Following up and coordinating with various groups to get changes deployed takes a lot of time away from all stakeholders. Furthermore, with shorter deadlines and immense pressure to meet business objectives, teams end up working long hours to get their changes deployed.

According to a report by D2IQ, almost all organizations (96%) face challenges and complexity in running containerized applications beyond their initial deployment (what DevOps practitioners call Day 2 operations), and cite Kubernetes as a source of pain. The report also shares, “51% of developers and architects say building cloud-native applications makes them want to find a new job”.

This is extremely stressful for senior IT leaders who are responsible for deploying containerized applications for their organizations.

Dealing with security challenges

Out of the box, Kubernetes does not enforce policies such as checking images for known vulnerabilities. So if you rely on Kubernetes alone for deployments, you need another way to enforce them: typically manual checks or custom scripts.
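As an illustration of such scripting, here is a minimal sketch of a pre-deployment policy check. It inspects the containers in a Deployment-style manifest and flags images that are unpinned (no tag, or `:latest`) or that come from a registry outside an allow-list. The allow-list entries are hypothetical, the reference parsing is a simplified version of Docker's image-naming rules, and the check does not find vulnerabilities at all; that still needs a dedicated image scanner.

```python
from typing import List, Optional, Tuple

# Hypothetical allow-list; replace with the registries your organization trusts.
ALLOWED_REGISTRIES = {"registry.example.com", "docker.io"}


def parse_image(ref: str) -> Tuple[str, Optional[str], Optional[str]]:
    """Split an image reference into (registry, tag, digest) using simplified Docker rules."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)
    first, _, rest = ref.partition("/")
    # The first path component is a registry host only if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", ref
    name = path.rsplit("/", 1)[-1]
    tag = name.split(":", 1)[1] if ":" in name else None
    return registry, tag, digest


def violations(manifest: dict) -> List[str]:
    """List policy violations for every container in a Deployment-style manifest."""
    problems = []
    for c in manifest["spec"]["template"]["spec"].get("containers", []):
        registry, tag, digest = parse_image(c["image"])
        if registry not in ALLOWED_REGISTRIES:
            problems.append(f"{c['name']}: registry {registry} is not allowed")
        if digest is None and tag in (None, "latest"):
            problems.append(f"{c['name']}: pin the image to a version tag or digest, not latest")
    return problems
```

A pipeline step would run `violations()` on each manifest and stop the deployment if the list is non-empty.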

For example, by default (that is, when no NetworkPolicy selects them), Kubernetes pods accept traffic from any other pod and can reach external endpoints, which lets applications communicate seamlessly. But if an application or infrastructure vulnerability lets an attacker breach one container or pod, the attacker can use it to reach and attack all the others (a technique known as lateral movement).
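A common mitigation is a namespace-wide "default deny" NetworkPolicy followed by explicit allow rules for the traffic each workload needs. The sketch below builds both as dicts in the `networking.k8s.io/v1` format. The namespace `shop`, the `app` labels, and port 8080 (a container port) are assumptions, and the policies only take effect if the cluster's network plugin enforces NetworkPolicy.

```python
import json


def default_deny(namespace: str) -> dict:
    """NetworkPolicy that blocks all incoming traffic to every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},  # an empty selector matches every pod in the namespace
            "policyTypes": ["Ingress"],  # no ingress rules are listed, so nothing is allowed in
        },
    }


def allow_ingress(namespace: str, app: str, from_app: str, port: int) -> dict:
    """NetworkPolicy letting pods labeled app=<from_app> reach app=<app> on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Pipe the output into `kubectl apply -f -`; kubectl accepts a v1 List of objects.
    items = [default_deny("shop"), allow_ingress("shop", "api", "frontend", 8080)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Adding `"Egress"` to `policyTypes` would also isolate outbound traffic, but then DNS lookups and every outbound dependency need their own explicit allow rules.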

When organizations prioritize delivery speed, security and compliance are sometimes relegated to an afterthought. As they adopt Kubernetes, organizations should instead integrate security and compliance into their build, test, deploy, and production stages.

Deployment strategies and post-deployment health checks

One common reason to run applications on Kubernetes is to scale to large user bases on demand. A production environment can run many nodes, hundreds of pods, and thousands of containers serving multiple application instances.

One way to introduce a change to the live audience safely is through controlled rollout strategies such as blue/green or canary deployments. This avoids the risk of releasing an unstable version to every end customer at once.
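With plain Kubernetes, a canary is often approximated by running a second Deployment for the new version whose pods also carry the label the Service selects, so traffic spreads across both sets of pods roughly in proportion to their replica counts. The sketch below computes those counts for a sequence of canary steps. The step percentages are assumptions, and the split is only approximate because kube-proxy balances connections, not individual requests.

```python
from typing import List, Sequence, Tuple


def canary_split(total_replicas: int, canary_percent: int) -> Tuple[int, int]:
    """Return (stable, canary) replica counts approximating a canary traffic share."""
    if total_replicas < 1:
        raise ValueError("need at least one replica")
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Integer ceiling, so any non-zero step gets at least one canary pod.
    canary = (total_replicas * canary_percent + 99) // 100
    return total_replicas - canary, canary


def rollout_plan(total_replicas: int, steps: Sequence[int] = (10, 25, 50, 100)) -> List[tuple]:
    """For each step, return (requested %, stable pods, canary pods, approximate actual %)."""
    plan = []
    for pct in steps:
        stable, canary = canary_split(total_replicas, pct)
        plan.append((pct, stable, canary, 100 * canary / total_replicas))
    return plan


if __name__ == "__main__":
    for requested, stable, canary, actual in rollout_plan(4):
        print(f"asked {requested}% -> {stable} stable + {canary} canary (~{actual:.0f}%)")
```

With four replicas, for instance, a "10%" step actually sends about a quarter of the traffic to the canary, since the smallest possible canary is one pod out of four.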

However, Kubernetes Deployments natively support only the RollingUpdate and Recreate strategies; blue/green and canary are not built in. On top of that, because containerized applications are distributed, it is cumbersome to collect and report a newly deployed Kubernetes app’s health status and to assess its vulnerabilities and risk to the organization.
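Doing blue/green by hand usually means running two Deployments whose pods are labeled `version: blue` and `version: green`, and repointing a Service's selector once the idle color is healthy. This sketch, with a hypothetical app name and ports, builds the Service and the JSON merge patch that flips it; the patch could be applied with `kubectl patch service myapp --type merge -p '<patch>'`.

```python
import json

APP = "myapp"  # hypothetical application name


def service(active_color: str) -> dict:
    """Service that routes all traffic to pods labeled with the active color."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP},
        "spec": {
            "selector": {"app": APP, "version": active_color},
            "ports": [{"port": 80, "targetPort": 8080}],  # hypothetical ports
        },
    }


def switch_patch(current: str, healthy: dict) -> tuple:
    """Return (new_color, merge patch JSON) if the idle color is healthy; otherwise raise."""
    target = "green" if current == "blue" else "blue"
    if not healthy.get(target, False):
        raise RuntimeError(f"{target} is not healthy; keeping traffic on {current}")
    # A JSON merge patch only replaces the keys it names, so the `app` label stays intact.
    return target, json.dumps({"spec": {"selector": {"version": target}}})
```

Rolling back is the same patch with the colors swapped, provided the old Deployment is still running. Deciding when the idle color counts as healthy is exactly the part Kubernetes leaves to you.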

Kubernetes Deployment Best Practices with Spinnaker

Spinnaker is a multi-cloud continuous delivery platform for releasing code quickly and staying ahead of the competition. Spinnaker treats Kubernetes applications as first-class citizens and helps IT teams rapidly deploy applications into any Kubernetes cluster (self-managed K8S, GKE, EKS, or AKS). The following are some important Spinnaker capabilities.

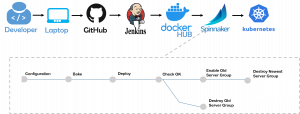

Spinnaker pipeline for end-to-end deploy automation

Spinnaker offers end-to-end pipelines for automatic deployments (see the pipeline figure). Its workflow constructs support consistent, repeatable deployments: a pipeline can bake Amazon Machine Images (AMIs) or Docker images on demand, find K8S workloads in clusters and deploy them, modify clusters and server groups, and run containers in K8S.
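Spinnaker stores pipelines as JSON, where each stage has a `refId` and lists the stages it waits for in `requisiteStageRefIds`. The sketch below models a small, hypothetical pipeline (bake, deploy to staging, manual approval, deploy to prod) in that shape and uses Python's `graphlib` to derive a valid execution order. The stage names and types are illustrative, and real stage definitions carry many more fields.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical, heavily trimmed pipeline in the shape of Spinnaker's pipeline JSON.
PIPELINE = {
    "name": "nginx-release",
    "stages": [
        {"refId": "1", "requisiteStageRefIds": [], "type": "bakeManifest", "name": "Bake"},
        {"refId": "2", "requisiteStageRefIds": ["1"], "type": "deployManifest", "name": "Deploy staging"},
        {"refId": "3", "requisiteStageRefIds": ["2"], "type": "manualJudgment", "name": "Approve"},
        {"refId": "4", "requisiteStageRefIds": ["3"], "type": "deployManifest", "name": "Deploy prod"},
    ],
}


def execution_order(pipeline: dict) -> list:
    """Return stage names in an order that satisfies every stage's dependencies."""
    by_id = {stage["refId"]: stage for stage in pipeline["stages"]}
    # TopologicalSorter expects {node: predecessors}, which is what requisiteStageRefIds holds.
    graph = {ref: set(stage["requisiteStageRefIds"]) for ref, stage in by_id.items()}
    return [by_id[ref]["name"] for ref in TopologicalSorter(graph).static_order()]


if __name__ == "__main__":
    print(" -> ".join(execution_order(PIPELINE)))
```

A circular dependency makes `static_order()` raise `graphlib.CycleError`; stages that share the same predecessors form parallel branches.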

The pipeline in the figure shows how Spinnaker can complement Kubernetes to provide a robust CI/CD value chain.

After deployment, Spinnaker checks and displays the health of Kubernetes clusters in real time. Notifications can be configured for the pipeline as a whole or for individual stages, alerting stakeholders by email, SMS, or Slack message.
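The health signal behind such a view comes from status fields Kubernetes already reports for each Deployment. As a rough sketch, the check below mirrors the main conditions `kubectl rollout status` evaluates (it ignores progress-deadline timeouts) and calls a notifier when the rollout is incomplete. `notify` is a hypothetical stand-in for an email, SMS, or Slack integration, and the input would be the JSON returned by `kubectl get deployment <name> -o json`.

```python
from typing import Callable


def rollout_complete(deployment: dict) -> bool:
    """Roughly mirror the checks `kubectl rollout status` applies to a Deployment."""
    meta, spec = deployment["metadata"], deployment["spec"]
    status = deployment.get("status", {})
    # The controller must have observed the latest spec before its status means anything.
    if status.get("observedGeneration", 0) < meta.get("generation", 0):
        return False
    desired = spec.get("replicas", 1)  # Kubernetes defaults replicas to 1
    updated = status.get("updatedReplicas", 0)  # zero-valued fields may be omitted
    if updated < desired:
        return False  # not every replica runs the new pod template yet
    if status.get("replicas", 0) > updated:
        return False  # old replicas are still pending termination
    return status.get("availableReplicas", 0) >= updated


def check_and_notify(deployment: dict, notify: Callable[[str], None]) -> bool:
    """Run the health check and send a message through `notify` if the rollout is incomplete."""
    ok = rollout_complete(deployment)
    if not ok:
        status = deployment.get("status", {})
        notify(
            f"{deployment['metadata']['name']}: rollout incomplete, "
            f"{status.get('availableReplicas', 0)}/{deployment['spec'].get('replicas', 1)} available"
        )
    return ok
```

For a quick local test, `check_and_notify(dep, print)` prints the alert instead of sending it.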

Read more on Deploying into Kubernetes using Spinnaker CD pipeline, and Continuous Deployment to Kubernetes using GitHub triggered Spinnaker pipelines.

Inbuilt Deployment Strategies

Spinnaker offers several deployment strategies, such as blue/green, rolling blue/green, Highlander, and canary, to reduce the risk of deploying into production. Spinnaker also works with Kubernetes pod autoscalers to ensure that capacity is maintained during deployment. Read more about the blue/green strategy for application deployment using Spinnaker pipelines.

Automated Canary Analysis (ACA), a Spinnaker technique, can help minimize the risk of deploying an update to a production K8S cluster by comparing the metrics and logs emitted by the old version with those emitted by a small deployment of the new version.
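To illustrate the idea behind ACA, here is a deliberately simplified judge. Kayenta's default judge classifies each metric with a statistical test (Mann-Whitney U) rather than the fixed tolerance used here; the metric names, the 10% tolerance, and the pass score of 75 are assumptions for illustration only.

```python
from statistics import mean
from typing import Dict, List, Tuple


def judge(
    baseline: Dict[str, List[float]],
    canary: Dict[str, List[float]],
    tolerance: float = 0.10,
    pass_score: float = 75.0,
) -> Tuple[float, bool, Dict[str, bool]]:
    """Score a canary against a baseline, treating every metric as 'lower is better'."""
    per_metric = {}
    for metric, base_samples in baseline.items():
        base, cand = mean(base_samples), mean(canary[metric])
        # A metric fails if the canary is worse than the baseline by more than `tolerance`.
        per_metric[metric] = cand <= base * (1 + tolerance)
    score = 100.0 * sum(per_metric.values()) / len(per_metric)
    return score, score >= pass_score, per_metric
```

Called with samples collected from both deployments, `judge(baseline, canary)` returns the overall score, the pass/fail decision, and which metrics failed, which is the information a pipeline needs to promote or roll back.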

Spinnaker also versions ConfigMaps and Secrets and deploys immutable server groups, so a rollback restores both the exact configuration and the binaries that were previously running.
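The idea is that each change to a ConfigMap or Secret is deployed as a new, immutable object with a version suffix (Spinnaker's Kubernetes provider uses names such as `app-config-v000` and `app-config-v001`) instead of being edited in place, so a rollback can point back at the exact configuration an older release used. Spinnaker does this automatically; the sketch below only reproduces the naming and reference-pinning idea, and the exact suffix format should be checked against the Spinnaker documentation for your version.

```python
import copy
import re
from typing import List


def next_version_name(base: str, existing: List[str]) -> str:
    """Return the next '<base>-vNNN' name, e.g. app-config-v002 after v000 and v001."""
    pattern = re.compile(re.escape(base) + r"-v(\d{3})$")
    versions = [int(m.group(1)) for m in map(pattern.match, existing) if m]
    nxt = max(versions) + 1 if versions else 0
    return f"{base}-v{nxt:03d}"


def pin_config(deployment: dict, base: str, versioned: str) -> dict:
    """Return a copy of the Deployment whose configMap volumes named `base` use `versioned`."""
    pinned = copy.deepcopy(deployment)  # leave the caller's manifest untouched
    for volume in pinned["spec"]["template"]["spec"].get("volumes", []):
        config_map = volume.get("configMap")
        if config_map and config_map.get("name") == base:
            config_map["name"] = versioned
    return pinned
```

Because `app-config-v001` still exists after `app-config-v002` is deployed, rolling back amounts to redeploying the previous Deployment, which still references v001.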

Continuous Verification of New Artifacts

OpsMx Enterprise for Spinnaker uses AI/ML on logs and metrics to detect issues in newly deployed Kubernetes applications and, if it finds an anomaly, rolls back the new version. Before doing so, it ensures that the previous server group is adequately sized and receiving 100% of the traffic before it disables and deletes the newly created server group.
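The order of operations is what makes such a rollback safe: the previous server group must be back at full size and carrying all traffic before the new one is disabled and deleted. This minimal sketch encodes that order against a toy in-memory cluster; the class, its methods, and the server group names are hypothetical and are not Spinnaker or OpsMx APIs.

```python
class InMemoryCluster:
    """Toy stand-in for a cluster API, used only to exercise the ordering (hypothetical)."""

    def __init__(self, ready: dict):
        self.ready = dict(ready)  # server group name -> ready replicas
        self.traffic_to = None
        self.events = []

    def resize(self, group: str, replicas: int) -> None:
        self.ready[group] = replicas  # pretend new pods become ready immediately
        self.events.append(("resize", group))

    def ready_replicas(self, group: str) -> int:
        return self.ready.get(group, 0)

    def route_all_traffic(self, group: str) -> None:
        self.traffic_to = group
        self.events.append(("traffic", group))

    def disable(self, group: str) -> None:
        self.events.append(("disable", group))

    def delete(self, group: str) -> None:
        self.ready.pop(group, None)
        self.events.append(("delete", group))


def rollback(cluster, previous: str, new: str, desired: int) -> None:
    """Restore `previous` to full size and traffic before removing the faulty `new` group."""
    cluster.resize(previous, desired)
    if cluster.ready_replicas(previous) < desired:
        # Never tear down the new group while the old one cannot carry the load.
        raise RuntimeError(f"{previous} is not fully ready; leaving {new} in place")
    cluster.route_all_traffic(previous)
    cluster.disable(new)
    cluster.delete(new)
```

In a real system each step would also wait and re-check health, but the ordering is the part that prevents an outage during rollback.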

Read more on enabling canary analysis with Kayenta and Spinnaker.

Enforcing Security and Compliance in Kubernetes Deployments

Securing your Kubernetes usage requires building security and compliance gates into the whole software development lifecycle, from coding through build to deployment. For example, OpsMx Enterprise for Spinnaker (OES) can use a security gate in the build stage to fail a deployment if the build fails a smoke test. Similarly, security gates can check whether a container image has passed image scanning.
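In the end, a security gate like this is a pass/fail decision over a scanner's report. The sketch below assumes a hypothetical, simplified report format (a list of findings with `id` and `severity`), not the output schema of any particular scanner or of OES, and fails when more findings at or above a chosen severity appear than the allowed budget.

```python
import json
import sys
from typing import List, Tuple

# Severity ranks for the hypothetical report format; unrecognized labels rank lowest.
SEVERITY_RANK = {"UNKNOWN": 0, "LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def gate(report: dict, fail_at: str = "HIGH", max_allowed: int = 0) -> Tuple[bool, List[str]]:
    """Return (passed, blocking finding IDs) for a simplified image-scan report."""
    threshold = SEVERITY_RANK[fail_at.upper()]
    blocking = [
        finding["id"]
        for finding in report.get("findings", [])
        if SEVERITY_RANK.get(str(finding.get("severity", "UNKNOWN")).upper(), 0) >= threshold
    ]
    return len(blocking) <= max_allowed, blocking


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python gate.py scan-report.json  (a non-zero exit code fails the pipeline stage)
    with open(sys.argv[1]) as fh:
        passed, blocking = gate(json.load(fh))
    print("PASS" if passed else "FAIL: " + ", ".join(blocking))
    sys.exit(0 if passed else 1)
```

Relying on the process exit code keeps the gate usable from almost any pipeline tool that can run a script.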

Spinnaker offers production-grade secret management through integration with a secrets vault for storing passwords and tokens. Spinnaker also integrates with authentication protocols such as LDAP and SAML and with authorization sources such as Google Groups, SAML roles, and GitHub Teams, maintaining security throughout the software delivery process.

OpsMx Enterprise for Spinnaker lets you write policies that enforce organizational guidelines and industry standards such as PCI DSS, HIPAA, and SOC 2. For example, release managers can define blackout-window policies that specify the dates and times when deployments are not allowed. Code that carries a downtime risk should not be deployed during peak traffic periods, and deployment windows let pipelines ensure that deployments into K8S happen outside those periods so they do not affect the customer experience. Read more on how to make CI/CD pipelines compliant and auditable using OpsMx Enterprise for Spinnaker.
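A blackout-window policy boils down to a time check the pipeline runs before a deploy stage. Here is a minimal sketch with hypothetical windows (weekday evening peaks and a year-end freeze), all defined in UTC; in a real setup the windows would come from the policy configuration rather than constants.

```python
from datetime import date, datetime, time, timezone

# Hypothetical policy, defined in UTC: no deploys Mon-Fri 17:00-21:00 (peak traffic)
# and none during a year-end freeze (inclusive dates).
DAILY_BLACKOUTS = [(range(0, 5), time(17, 0), time(21, 0))]  # weekday() 0-4 = Mon-Fri
FREEZES = [(date(2024, 12, 20), date(2025, 1, 2))]


def deploy_allowed(when: datetime) -> bool:
    """Return False if `when` (timezone-aware) falls inside any blackout window."""
    if when.tzinfo is None:
        raise ValueError("use a timezone-aware datetime so windows are unambiguous")
    utc = when.astimezone(timezone.utc)
    for start, end in FREEZES:
        if start <= utc.date() <= end:
            return False
    for weekdays, start, end in DAILY_BLACKOUTS:
        if utc.weekday() in weekdays and start <= utc.time() < end:
            return False
    return True
```

A pipeline stage would call `deploy_allowed(datetime.now(timezone.utc))` right before deploying and fail, or wait, when it returns `False`.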

GitOps Style Deployments

OpsMx Enterprise for Spinnaker (OES) allows organizations to perform GitOps-style deployments into Kubernetes clusters. Teams familiar with YAML can change the manifest files in Git, and OES can be configured to detect those changes and deploy them automatically into the chosen environments.
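Underneath, GitOps-style automation compares what is committed in Git with what was last deployed and acts only on the difference. The sketch below makes that comparison with SHA-256 hashes of manifest files in a local checkout. The directory layout and the idea of storing last-deployed hashes are assumptions, and a real setup would typically be triggered by a Git webhook or poller rather than a manual scan.

```python
import hashlib
from pathlib import Path


def manifest_hashes(root: str) -> dict:
    """Map each YAML/JSON manifest under `root` to the SHA-256 of its contents."""
    hashes = {}
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in {".yaml", ".yml", ".json"}:
            hashes[str(path.relative_to(root))] = hashlib.sha256(path.read_bytes()).hexdigest()
    return hashes


def changed_manifests(current: dict, last_deployed: dict) -> dict:
    """Split manifests into added/modified/removed relative to the last deployment."""
    return {
        "added": sorted(set(current) - set(last_deployed)),
        "modified": sorted(
            p for p in current.keys() & last_deployed.keys() if current[p] != last_deployed[p]
        ),
        "removed": sorted(set(last_deployed) - set(current)),
    }
```

Added and modified manifests would then be applied to the target environment, while removed ones signal resources that may need to be deleted.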

Final Thoughts

Adopting cloud-native applications is a key path to business agility, and Kubernetes plays a central role in it. Organizations that combine Kubernetes with Spinnaker are more likely to see positive results, and to see them faster, than those working with Kubernetes alone.

If you want to know more about Spinnaker or request a demonstration, please book a meeting with us.

OpsMx is a leading provider of Continuous Delivery solutions that help enterprises safely deliver software at scale and without any human intervention. We help engineering teams take the risk and manual effort out of releasing innovations at the speed of modern business. For additional information, contact us