Speeding up CI/CD with Machine Learning

“We implemented a modern CI & CD, but our process is still slow and costly.”

So said the CIO of a large Fortune 100 enterprise during an interview. The CIO leads a team of about 10,000 engineers who are responsible for delivering 1,000+ applications and performing 400,000 deployments a year.

We examined their delivery ecosystem and tried to understand the root cause of slow delivery in their CI/CD process. In this blog, we describe and analyze the problems that similar customers face when trying to speed up risk assessments and approvals in their CI/CD process. We also discuss how these customers can resolve these problems with a continuous verification platform.

Here is a video on the same topic:

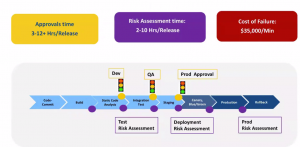

Approvals & Risk assessment are slow & expensive

In this customer's delivery pipeline, approving a release in dev, QA, and production typically takes around 3-12 hours. Before the final approval at each stage, risk assessment takes another 2-10 hours per release. Depending on the pipeline stage being assessed, risk assessment falls into the following types:

Risk Assessment Types

- Deployment Risk Assessment – During deployment, you use one of the available deployment strategies, such as Canary, Blue-Green, or Rolling Update, and based on its results you decide whether to continue the deployment or roll back.

- Test Risk Assessment – At the test stage, you review the results of automated test cases and scenarios and determine whether the application artifacts can move to the next stage.

- Prod Risk Assessment – When you finally deploy to the live (production) environment, you need to determine whether the deployed release is of acceptable quality. If there is a problem, you need to either react to the anomalies or roll back the release.
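To make the deployment-stage decision more concrete, here is a minimal sketch of a canary comparison in Python. It assumes metric samples for the baseline and canary versions have already been collected and that higher values are worse (as for latency or error rate). The metric names, the 10% tolerance, and the 0.8 pass threshold are hypothetical values chosen for illustration; this is not how Autopilot scores a canary.

```python
from statistics import mean


def canary_score(baseline: dict[str, list[float]],
                 canary: dict[str, list[float]],
                 tolerance: float = 0.10) -> float:
    """Fraction of metrics (0.0-1.0) whose canary mean is at most `tolerance`
    (relative) above the baseline mean. Assumes higher values are worse."""
    passed = 0
    for name, base_samples in baseline.items():
        base = mean(base_samples)
        cand = mean(canary[name])
        if base == 0:
            ok = cand == 0  # any errors where the baseline had none count as a regression
        else:
            ok = (cand - base) / base <= tolerance
        passed += int(ok)
    return passed / len(baseline)


def deployment_decision(score: float, threshold: float = 0.8) -> str:
    """Continue the rollout only if enough metrics look healthy; otherwise roll back."""
    return "continue" if score >= threshold else "rollback"


# Hypothetical samples: latency is stable, but the canary's error rate has nearly tripled.
baseline = {"latency_ms": [120, 118, 125], "error_rate": [0.010, 0.012, 0.011]}
canary = {"latency_ms": [122, 119, 127], "error_rate": [0.030, 0.028, 0.033]}

score = canary_score(baseline, canary)
print(score, deployment_decision(score))  # 0.5 rollback
```

A real canary analysis would typically apply statistical tests over many more samples and weight metrics by importance; the per-metric tolerance here only shows the shape of the continue-or-rollback decision.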

If risk assessments and approvals are not done right, a production failure costs this customer roughly $35,000 per minute, and compliance violations are very costly as well.

Approvals & Risk assessment are slow & expensive

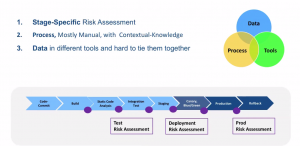

Why is Risk Assessment hard and time-consuming?

Risk Assessment is hard for three reasons:

- Stage-specific Risk Assessment

Risk assessment at the deployment stage differs from risk assessment at the test stage, and it is also highly specific to each application. At the test stage, we need to check whether any critical test case has failed before deciding to progress the release to the next stage. During deployment, we need to examine logs, metrics, and events from our Canary or Blue-Green deployment to assess whether the release is ready for production. In production, we try to determine whether there is a problem that requires a rollback.

- Requirement of contextual knowledge

Risk assessment processes are mostly manual and require a great deal of contextual or domain knowledge. For example, one needs to know which specific log errors are critical for a particular application and which are not. This creates a dependency on domain experts, and the risk assessment process slows down whenever they are busy with other core activities or away on vacation.

- Absence of a single source of truth

The data required for risk assessment is dispersed across different tools, and it is difficult to locate and aggregate it in one place. For instance, test-case results and logs live in automated testing tools such as JMeter. Similarly, to analyze logs and metrics, an SRE has to download them from APM and monitoring tools (such as AppDynamics, Dynatrace, and Prometheus) and log analyzers (such as Splunk and Sumo Logic). Aggregating this data manually across tools and technologies requires collaboration between teams and is often time-consuming.
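One way to reduce the dependency on individual experts is to record their knowledge as data. The sketch below assumes test results and log lines have already been exported from tools such as JMeter and Splunk into plain Python structures. It keeps per-application "critical error" patterns in one table and merges both signals into a single summary. The application names, regex patterns, and the `StageSummary` and `summarize` names are all hypothetical.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, expert-maintained table: which log errors are critical for which app.
CRITICAL_LOG_PATTERNS: dict[str, list[str]] = {
    "payments": [r"TransactionRollbackException", r"ledger mismatch"],
    "catalog": [r"OutOfMemoryError"],
}


@dataclass
class StageSummary:
    """One consolidated view of the risk signals for an application at one stage."""
    app: str
    failed_critical_tests: list[str] = field(default_factory=list)
    critical_log_lines: list[str] = field(default_factory=list)

    @property
    def is_risky(self) -> bool:
        return bool(self.failed_critical_tests or self.critical_log_lines)


def summarize(app: str,
              test_results: dict[str, bool],
              critical_tests: set[str],
              log_lines: list[str]) -> StageSummary:
    """Merge test results (name -> passed) and log lines, exported from separate
    tools, into one StageSummary using the app's critical-error patterns."""
    patterns = [re.compile(p) for p in CRITICAL_LOG_PATTERNS.get(app, [])]
    return StageSummary(
        app=app,
        failed_critical_tests=sorted(
            name for name, passed in test_results.items()
            if not passed and name in critical_tests
        ),
        critical_log_lines=[
            line for line in log_lines if any(p.search(line) for p in patterns)
        ],
    )


tests = {"checkout_flow": True, "refund_flow": False, "ui_theme": False}
logs = [
    "WARN slow query on orders table",
    "ERROR ledger mismatch for order 42",
    "ERROR NullPointerException in promo banner",  # an error, but not critical for payments
]
summary = summarize("payments", tests, {"checkout_flow", "refund_flow"}, logs)
print(summary.failed_critical_tests, summary.critical_log_lines, summary.is_risky)
```

The failing `ui_theme` test and the `NullPointerException` line are ignored because the table does not mark them as critical for `payments`, which is exactly the kind of judgment that otherwise lives only in an expert's head.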

Why is Risk Assessment hard?

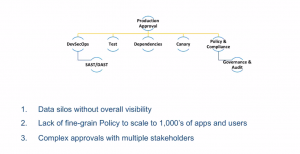

Why are approvals slow & expensive?

The following reasons are responsible for making the approval process slow and expensive:

- Data silos without overall visibility

- Lack of fine-grained policies that scale to thousands of apps and users

- Complex approvals with multiple stakeholders

Once risk assessment is done, the risk data from the different stages must be rolled up to make the approval decision. This includes DevSecOps data from SAST and DAST tools, test data from testing tools, app version dependencies, Canary analysis data, policy and compliance checks, and so on. All this data is spread across several places in silos, so the approver lacks overall visibility.
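As an illustration of a fine-grained approval policy, the sketch below rolls up per-stage evidence into one record and checks it against a list of named policies. Every field name and threshold here (for example, a 98% test pass rate or a 0.8 canary score) is a hypothetical assumption, not an Autopilot API. A release that passes every policy is approved automatically; otherwise the approver sees exactly which policies failed.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ReleaseEvidence:
    """Hypothetical rolled-up evidence for one release, gathered from each stage."""
    sast_high_findings: int       # high-severity findings from a SAST tool
    dast_high_findings: int       # high-severity findings from a DAST tool
    test_pass_rate: float         # 0.0-1.0, from testing tools
    canary_score: float           # 0.0-1.0, from canary analysis
    dependencies_approved: bool   # are the app-version dependencies already approved?


# A policy is a human-readable name plus a predicate over the evidence.
Policy = tuple[str, Callable[[ReleaseEvidence], bool]]

# Hypothetical thresholds; real policies would come from the organization.
POLICIES: list[Policy] = [
    ("no high-severity SAST findings", lambda e: e.sast_high_findings == 0),
    ("no high-severity DAST findings", lambda e: e.dast_high_findings == 0),
    ("test pass rate >= 98%", lambda e: e.test_pass_rate >= 0.98),
    ("canary score >= 0.8", lambda e: e.canary_score >= 0.8),
    ("dependencies approved", lambda e: e.dependencies_approved),
]


def evaluate(evidence: ReleaseEvidence,
             policies: list[Policy] = POLICIES) -> tuple[str, list[str]]:
    """Return ("approve", []) if every policy passes, otherwise
    ("manual_review", names of the failed policies) for a human approver."""
    failed = [name for name, check in policies if not check(evidence)]
    return ("approve" if not failed else "manual_review", failed)


clean = ReleaseEvidence(0, 0, 0.99, 0.9, True)
risky = ReleaseEvidence(1, 0, 0.99, 0.5, True)
print(evaluate(clean))  # ('approve', [])
print(evaluate(risky))  # ('manual_review', ['no high-severity SAST findings', 'canary score >= 0.8'])
```

Keeping each policy as a small named predicate is what makes it "fine-grained": policies can be added per application or per team without touching the evaluation logic.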

Why are approvals slow and expensive?

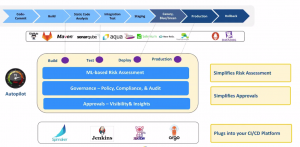

Autopilot: Data-Driven Automation of CI/CD Decisions

OpsMx Autopilot is a continuous verification (CV) platform that can verify software releases at every stage of software delivery. It is also an integral part of OpsMx Enterprise for Spinnaker and works with all CI and CD tools. Autopilot applies machine learning and context built over the SDLC to improve the quality of delivery. Enterprises can use it to perform risk assessment at every CI/CD stage: Build, Test, Deploy, and Prod.

Autopilot provides the following features:

- Simplifies risk assessment through ML-based analysis

- Simplifies approvals by applying policy and compliance checks

- Provides insights at your fingertips

- Plugs into your CI/CD platform

Autopilot addresses the problems of a slow and expensive risk assessment and approval process. It collates data from the various stages (Build, Test, Deploy, and Production) and applies ML to calculate the risk of a release. After the risk assessment, it applies fine-grained policy checks for compliance and audit across all the stages. Finally, it gives the approver overall visibility so that decisions can be made either manually or automatically. Autopilot is highly interoperable, and its pluggable architecture allows it to work with other CI/CD platforms such as Spinnaker, Jenkins, Tekton, and Argo CD.

Autopilot: Data-Driven Automation of CI/CD Decisions

Customer Case Stories

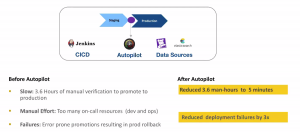

Case 1: Deployment Risk Assessment Case Study

In this situation, the customer had been facing three difficulties:

- Manual verification (checking deployment logs and metrics across multiple tools and services) usually took 3.6 hours before a release could be promoted to production.

- Too many on-call engineers from the dev and ops teams were involved in the release process.

- When releasing fast, they had error-prone releases that resulted in production rollbacks.

Solution:

The customer now uses Autopilot in the staging environment to observe every dependent service during deployment and to analyze logs, metrics, and events. The customer uses Jenkins as its CI/CD platform and Datadog and Elasticsearch for monitoring. With Autopilot, they reduced verification time from 3.5 hours to 5 minutes and cut deployment failures by 70%.

Deployment Risk Assessment – Case Study

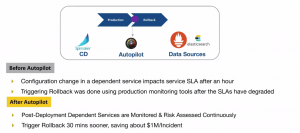

Case 2: Production Assessment Case Study

In this situation, the customer had been facing the following difficulties:

- A configuration change in a dependent service impacted the service SLA after about an hour.

- Rollbacks were triggered from production monitoring tools only after the SLA had degraded.

This use case involves a very large data center deployment in which the customer uses production monitoring tools to monitor its SLA. When a bad deployment takes place, it takes about an hour for the dependent services to be impacted. Their reactive response, triggering rollbacks only after SLAs had already degraded significantly, used to cost them dearly.

Solution:

The customer implemented Autopilot to continuously monitor the newly deployed application, including its dependent microservices. Today, Autopilot can trigger rollbacks 30 minutes before an SLA breach, saving the customer $1 million per incident.
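The case study does not describe how Autopilot predicts a breach. As a simple, swapped-in illustration of acting before an SLA breach rather than after it, the sketch below fits a least-squares line to recent measurements of an SLA metric and estimates how many minutes remain until the metric crosses its limit. The p99 latency metric, the 500 ms limit, the 5-minute sampling interval, and the 30-minute lead time are assumptions chosen to mirror the numbers in this case study.

```python
from typing import Optional


def minutes_until_breach(samples: list[tuple[float, float]],
                         limit: float) -> Optional[float]:
    """Fit y = intercept + slope * t by least squares to (minute, value) samples
    and return the minutes from the last sample until the fitted line reaches
    `limit`. Returns 0.0 if the latest value already breaches the limit and
    None if there is too little data or no upward trend to extrapolate."""
    if len(samples) < 2:
        return None
    ts = [t for t, _ in samples]
    ys = [y for _, y in samples]
    if ys[-1] >= limit:
        return 0.0
    n = len(samples)
    t_mean, y_mean = sum(ts) / n, sum(ys) / n
    var_t = sum((t - t_mean) ** 2 for t in ts)
    if var_t == 0:
        return None
    slope = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys)) / var_t
    if slope <= 0:
        return None
    intercept = y_mean - slope * t_mean
    return (limit - intercept) / slope - ts[-1]


def should_roll_back(samples: list[tuple[float, float]], limit: float,
                     lead_time_min: float = 30.0) -> bool:
    """Roll back early if the projected breach falls within the lead time."""
    remaining = minutes_until_breach(samples, limit)
    return remaining is not None and remaining <= lead_time_min


# Hypothetical p99 latency (ms) sampled every 5 minutes after a deployment; SLA limit 500 ms.
rising = [(0, 300), (5, 325), (10, 350), (15, 375), (20, 400)]
print(minutes_until_breach(rising, 500), should_roll_back(rising, 500))  # 20.0 True
```

Linear extrapolation is deliberately simple and will miss non-linear degradation, so treat it as a starting point for thinking about proactive rollbacks rather than a production-grade predictor.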

Production Risk Assessment – Case Study

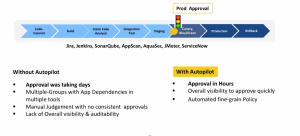

Case 3: Speeding up Approval – Case Study

A large insurance company was facing the following problems with their approval process:

- Approval used to take multiple days (sometimes weeks)

- A complex environment with multiple groups and too many dependencies on several apps and tools

- Manual judgment with no consistent approval process

- Lack of overall visibility and auditability

Solution:

Using Autopilot, they reduced approval time from days or weeks to hours. Autopilot provided overall visibility and automated fine-grained policy compliance checks, enabling quick decision-making and faster approvals.

Speeding up Approval – Case Study

Conclusion

In this blog, we discussed how manually accumulating data from different systems, dependency on domain expertise, and lack of visibility when approving a release are the primary factors that slow down software deployment and delivery. Even companies that use state-of-the-art technologies to implement continuous delivery may find that this alone is not sufficient. To attain scale in software delivery, an enterprise needs an intelligent tool that can talk to the different CI/CD tools in its ecosystem, gather data, use machine intelligence to calculate risks, and make automated decisions to progress a release to the next stage of the deployment process. If you are facing problems in CI/CD, take a look at our continuous verification solution, Autopilot. If you have any queries, please reach out to us.

To learn more about Autopilot or request a demonstration, please book a meeting with us for an Autopilot demo.

OpsMx is a leading provider of Continuous Delivery solutions that help enterprises safely deliver software at scale and without any human intervention. We help engineering teams take the risk and manual effort out of releasing innovations at the speed of modern business. For additional information, contact OpsMx Support.