Why You Need to Scale Beyond Jenkins to Unlock Speed and Stability

Jenkins is the stepping stone for any organization looking to implement a CI/CD pipeline and move away from a legacy software delivery process. Jenkins started as a tool to reduce errors caused by broken builds and code failures. It later became the center of a practice called automated continuous integration, which checks code for failures before it is committed for deployment. In short, Jenkins is an automation tool that software development teams use to bring continuous integration into their delivery process. It was written in Java, initially targeted Java projects, and later expanded to support multiple languages.

Over the years, plugins have enabled Jenkins to automate deployment and testing as well. This lets developers push changes into production through a very rudimentary form of continuous delivery. We love Jenkins, but Jenkins just doesn’t perform well when organizations want a scalable and efficient continuous delivery process. Continuous delivery piggybacking on Jenkins' extended plugin ecosystem brought in a lot of inefficiencies and deployment issues. To reshape the continuous delivery process, we must replace these old Jenkins-centric delivery practices with modern continuous delivery platforms.

Real-world issues found in a Jenkins-enabled CD process:

Jenkins is primarily a Java application, and it runs on the Java Virtual Machine (JVM). Of course, Java technology powers a lot of things that we touch and use. Still, many Java platforms become slow and unresponsive on large-scale projects compared to tools written in more modern languages.

We will expand on these real-world issues that organizations face while using Jenkins as a CD tool. They highlight common pitfalls, help us develop our perspective, and show where we have been losing out.

1. An Inefficient Garbage Collection and Cleaning Process

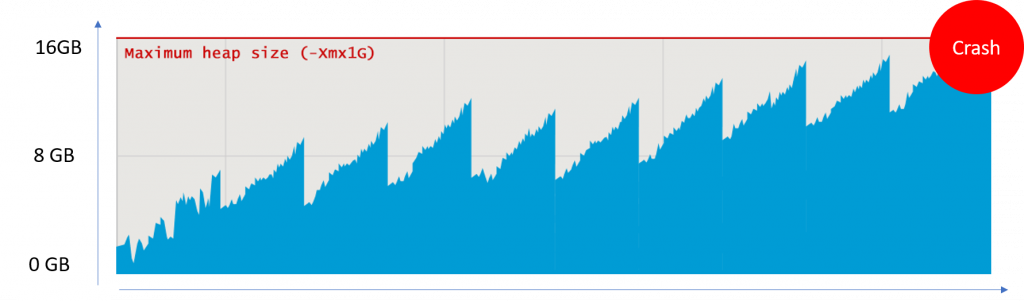

Because Jenkins runs on the JVM, its memory is reclaimed by the JVM garbage collector, which in its classic form follows a simple three-stage algorithm: Mark, Sweep and Defragment (compact). In the marking stage, the garbage collector classifies objects into two classes: reachable and unreachable. After marking is complete, it moves on to sweeping, otherwise known as cleaning. In this stage, the objects marked as unreachable are deleted, and the surviving objects are then defragmented (compacted) so that free memory is contiguous.
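To make the three stages concrete, here is a minimal Python sketch of a toy mark-sweep-compact collector over a simulated heap. It only illustrates the textbook algorithm described above; it is not how the JVM (or Jenkins) actually manages memory, and the `Obj`, `mark`, `sweep`, `compact`, and `collect` names are our own.

```python
"""Toy mark-sweep-compact collector over a simulated heap.

Illustrative sketch of the classic three-phase algorithm only;
this is not the JVM's real garbage collector.
"""
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Obj:
    name: str
    refs: List[str] = field(default_factory=list)  # names of objects this one points to


Heap = List[Optional[Obj]]  # None marks a free slot


def mark(heap: Heap, roots: List[str]) -> Set[str]:
    """Phase 1: find every object reachable from the roots (depth-first)."""
    by_name = {o.name: o for o in heap if o is not None}
    reachable: Set[str] = set()
    stack = list(roots)
    while stack:
        name = stack.pop()
        if name in reachable or name not in by_name:
            continue
        reachable.add(name)
        stack.extend(by_name[name].refs)
    return reachable


def sweep(heap: Heap, reachable: Set[str]) -> Heap:
    """Phase 2: free (set to None) every slot holding an unreachable object."""
    return [o if o is not None and o.name in reachable else None for o in heap]


def compact(heap: Heap) -> Heap:
    """Phase 3: slide survivors to the front so free space is contiguous."""
    survivors = [o for o in heap if o is not None]
    return survivors + [None] * (len(heap) - len(survivors))


def collect(heap: Heap, roots: List[str]) -> Heap:
    return compact(sweep(heap, mark(heap, roots)))


if __name__ == "__main__":
    heap: Heap = [Obj("a", ["b"]), Obj("orphan"), Obj("b"), None, Obj("c", ["a"])]
    after = collect(heap, roots=["c"])
    print([o.name if o else None for o in after])
```

In this toy version, sweep and compact both walk every slot of the heap, which hints at why a larger heap tends to make each full collection take longer.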

This outdated algorithm has limited capacity to scale, and at some point the instance will crash, as shown in the graph above. To work around this, we have to deploy additional third-party plugins to deal with memory leaks. Increasing the heap size (the memory the garbage collector manages) helps, but it is not a viable long-term solution. A larger heap means each collection takes longer, bringing the whole pipeline to a standstill while everyone waits for garbage collection to finish. We end up calling for manual intervention every time memory usage reaches a critical limit. The standard practice of allocating 16 GB is neither scalable nor future-proof.

2. Plugin Nightmares

Plugins are a great way to enhance product features. If you are familiar with WordPress, you will agree that plugins enhance its CMS capabilities. Though a plugin can turn WordPress into an ecommerce platform, that setup is not suited for running a large store. For that, we have tools like Magento. In the same way, Jenkins works with a lot of different plugins that add features to its core functionality. Developers can activate these plugins when needed and disable them when not required. This saves resources and makes Jenkins very helpful and versatile. However, plugins are only meant to extend features beyond the core function of a product. If we try to add core functions with the help of plugins, we end up bogging down the entire system.

In reality, many basic tasks require plugins. For example, pulling code from GitHub requires a plugin. One should not have to configure plugins for such a basic task; it has to be a core function.

Today the Docker environment is very common, so any delivery platform must support it as a core feature and not as a plugin. Jenkins boasts over a thousand different plugins, but even managing tens of plugins for simple pipelines can become inconvenient. It doesn’t seem wise to rely on the Jenkins ecosystem when you want to manage thousands of pipelines across multiple microservices. Maintaining a checklist of these plugins and configuring them takes a lot of valuable time away from experts, who are better utilized improving software.

Ultimately, not all plugins are approved for enterprise use. Many are owned by third parties, and using such plugins becomes a huge liability for any organization. Hence, choosing a platform where these capabilities are available as core functionality helps keep the software delivery pipeline stable and credible.

3. Scripted Backups and Rollbacks for Deployment

The Jenkins platform doesn’t come with a predefined rollback and backup strategy for failed deployments. Configuring and maintaining the mixed set of plugins that enables only a rudimentary delivery system is an arduous task. Backups have to be managed with separate plugins. To carry out an advanced deployment strategy, one has to find yet another plugin that is compatible with the others. The custom scripts used to deploy to Kubernetes or AWS VMs need maintenance and continuous monitoring, which is very resource-intensive.

A Jenkins job ends with a deployment trigger. This creates a complete blind spot for SREs, who have no real-time view of what happens after that point. Spinnaker, by contrast, goes many steps beyond simply triggering a deployment.
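The difference between ending at the trigger and going further can be sketched in a few lines of Python. This is a hypothetical illustration only: `deploy`, `is_healthy`, and the version strings are placeholders we made up, not Jenkins, Spinnaker, or OES APIs. The first function reports success as soon as the deployment is triggered; the second verifies the release and rolls back automatically when a health check fails.

```python
"""Fire-and-forget trigger vs. deploy-verify-rollback (hypothetical sketch).

`deploy` and `is_healthy` are stand-in callables, not real CI/CD APIs.
"""
from typing import Callable, List


def trigger_only(deploy: Callable[[str], None], version: str) -> str:
    # Ends at the trigger: reports success as soon as deploy() returns,
    # with no idea whether the release is actually healthy.
    deploy(version)
    return "triggered"


def deploy_with_verification(
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    version: str,
    previous: str,
    checks: int = 3,
) -> str:
    deploy(version)
    # Verification stage: run several health checks before declaring success.
    for _ in range(checks):
        if not is_healthy(version):
            # Automated rollback to the last known-good version.
            deploy(previous)
            return f"rolled back to {previous}"
    return f"{version} verified"


def v2_is_broken(version: str) -> bool:
    # Pretend release "v2" always fails its health checks.
    return version != "v2"


if __name__ == "__main__":
    history: List[str] = []  # records every version that was deployed
    print(trigger_only(history.append, "v2"))
    print(deploy_with_verification(history.append, v2_is_broken, "v2", "v1"))
    print(history)
```

The trigger-only path happily reports success for the broken release, while the verified path notices the failure and restores the previous version without manual intervention.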

When organizations are looking to deliver fast and frequent releases, this plugin ecosystem creates instability in the CD pipeline, so SREs must ensure that a dedicated CD platform is implemented.

4. Docker Compatibility

We must understand that the CI framework is a much older technology than Docker and Kubernetes. Continuous integration was designed for standalone monolithic servers. Vanilla Jenkins CI doesn’t allow developers to take full advantage of a modern infrastructure setup. We need multiple plugins and scripts to deploy into a modern Docker environment.

An extensive list of plugins that we need to set up before we can use Jenkins for Docker:

ant, antisamy-markup-formatter, build-timeout, cloudbees-folder, configuration-as-code, credentials-binding, email-ext, git, github-branch-source, gradle, ldap, mailer, matrix-auth, pam-auth, pipeline-github-lib, pipeline-stage-view, ssh-slaves, timestamper, workflow-aggregator, ws-cleanup
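A common way to manage such a checklist is to pin it in a `plugins.txt` file for the Jenkins plugin installation manager (`jenkins-plugin-cli --plugin-file plugins.txt`). The Python sketch below assumes that workflow and that file format (one plugin ID per line, optionally pinned as `id:version`, left unpinned here); it normalizes the list above and reports which plugins are still missing from a hypothetical installed set. The helper names are our own.

```python
"""Normalize the plugin checklist into plugins.txt content (sketch).

Assumption: the output feeds `jenkins-plugin-cli --plugin-file plugins.txt`,
which takes one plugin ID per line. Versions are intentionally unpinned.
"""
from typing import List, Set

CHECKLIST = (
    "ant, antisamy-markup-formatter, build-timeout, cloudbees-folder, "
    "configuration-as-code, credentials-binding, email-ext, git, "
    "github-branch-source, gradle, ldap, mailer, matrix-auth, pam-auth, "
    "pipeline-github-lib, pipeline-stage-view, ssh-slaves, timestamper, "
    "workflow-aggregator, ws-cleanup"
)


def parse_checklist(text: str) -> List[str]:
    # Split on commas, trim whitespace, drop blanks and duplicates,
    # and sort so the file produces stable diffs in version control.
    return sorted({p.strip() for p in text.split(",") if p.strip()})


def to_plugins_txt(plugins: List[str]) -> str:
    # One plugin ID per line; append ":<version>" to an entry to pin it.
    return "".join(f"{p}\n" for p in plugins)


def missing_plugins(required: List[str], installed: Set[str]) -> List[str]:
    # Everything on the checklist that is not installed yet still has to be
    # installed, configured, and kept compatible with the rest.
    return [p for p in required if p not in installed]


if __name__ == "__main__":
    plugins = parse_checklist(CHECKLIST)
    print(to_plugins_txt(plugins), end="")
    # Hypothetical instance that only has two of the plugins so far.
    print(len(missing_plugins(plugins, {"git", "mailer"})), "plugins still missing")
```

Every entry that `missing_plugins` returns is one more item someone has to install, configure, and keep compatible with the others.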

As organizations move away from VMs and standalone servers toward a Docker-native infrastructure, Jenkins' ability to meet this new set of requirements is very limited.

5. Microservice Compatibility

Jenkins was built in the pre-Docker era, when the concept of microservices was not yet mainstream. The introduction of Kubernetes made it much easier to implement and manage microservices, and developers flocked away from older infrastructure architectures to a microservices model. But Jenkins lacks support for integrating and testing multiple services at once, an essential capability in a microservices environment.

If an organization is looking for a next-generation, microservices-enabled software delivery platform, Jenkins will not shine. It would be like using outdated machinery to cut steel while competitors use laser-guided tools.

6. Continuous Integration Is Not Continuous Delivery

CI is the process of merging code from all developers into one central branch of a repository many times a day while trying to avoid conflicts in the code. CD, on the other hand, is a methodology that enables development teams to deploy changes such as new features, configuration, bug fixes, and experiments into production safely, quickly, and sustainably.

CI and CD are two very different things, and we should not confuse one with the other. A software delivery life cycle needs both CI and CD working together, and CD plays a much larger role in the software delivery pipeline. CD enables release automation while working in tandem with Jenkins, which ensures code compatibility. Read more on CI/CD in our blog: CICD Pipeline

No success until we adopt a CD culture

There is no hard-and-fast rule for building a flexible, scalable, and stable software delivery ecosystem, and trying to replicate the success stories of companies like Google and Netflix may not work for everyone. Still, we will miss out on many opportunities ahead of us if we address software delivery through the lens of a Jenkins mindset.

We need to instill CD practices among teams, with new technology paving the way toward success. An organization's innovation and scalability depend in large part on how well it adopts a CD culture, and a CD-inclined culture will shape its direction and success.

At OpsMx, we help organizations build the delivery framework that lets them meet customer demands quickly and stay up to date with technology. Organizations need to reassess their strategy to achieve a successful software delivery framework. OpsMx’s hassle-free 24/7 support frees organizations from worrying about maintaining a pipeline, giving them more time to focus on software development.

How do we scale beyond Jenkins?

Let us look beyond Jenkins and discover what new technology offers. Deployment methods have become much more advanced. Large-scale applications running hundreds of microservices would be unmanageable if we relied on Jenkins deployment plugins. These plugins and scripts cannot keep up with expanding pipelines, and the Jenkins architecture will not let it attain the full-scale power a modern deployment process offers.

Netflix realized the limitations of Jenkins very early and developed Spinnaker to overcome them, which allowed it to scale rapidly. We took the battle-tested, open-source Spinnaker and built an end-to-end orchestration layer for the software delivery pipeline. This layer allows us to manage and control the overall pipeline from a central location. Besides the orchestration layer, we have added another layer of intelligence that further expands control over governance, risk, and verification checks. Such integrated approval gates for promotion to production are nonexistent in a Jenkins-based pipeline.

OpsMx Enterprise for Spinnaker (OES) forms an all-in-one platform by latching on to the core features of Jenkins and freeing Jenkins systems from the peripheral plugins that weighed them down. In this win-win scenario, Jenkins focuses on its core feature, CI, while OES orchestrates the whole pipeline. With OES, you can expand your capabilities far beyond your current capacity.

We have achieved this by enabling the following features:

- Multi-cloud deployments for a hassle-free, cloud-agnostic work environment where developers can use the cloud of their choice. Our predefined templates make the process as simple as filling out a contact form.

- Deployment strategies are native to Spinnaker. Yes, you heard it right: no plugins are needed. OES goes a step further by automating the whole deployment cycle and taking care of risk, security, and governance.

- Automated pipelines provide the flexibility to control workflows and manage cloud resources. With predefined templates, organizations can scale from one pipeline to hundreds in minutes. The automated pipelines can handle tasks such as finding an image or artifact, deploying updates, and disabling, enabling, or resizing a server, which saves a lot of time and resource costs. Resizing helps you reduce your cloud infrastructure costs as well.

- The deployment safeguards ensure that recent versions can be added and removed safely. From verification of a new release to one-click rollback to cluster locking for maintaining exclusive access to resources during deployment, there is a plethora of safety features native to OES.

- We did not reinvent the wheel: OES builds on top of your existing Jenkins system, integrating seamlessly with Jenkins to fetch artifacts and automatically bake and deploy them into multiple clouds.

- Spinnaker has a built-in stage for Jenkins which can trigger Jenkins jobs.

- Spinnaker supports multi-cloud deployments out of the box.

- Spinnaker users can manage their cloud resources from the Spinnaker dashboard (clone, destroy, resize, scale up, scale down, etc.).

- OpsMx Enterprise for Spinnaker (OES) complements Jenkins: Jenkins is good at CI, and OES is a CD platform.

- Visibility and approval gates are really strong and easy to use in Spinnaker (OES).

- A Jenkins job ends with a deployment trigger, whereas Spinnaker goes many steps beyond simply triggering a deployment (for example, verification). Jenkins doesn’t know the status of the deployment once the pipeline has terminated. In Spinnaker, we can visualize the pipeline at the application and team level.

- Jenkins lacks visibility into the status of a deployment (it shows only the success or failure of the pipeline or deployment). Spinnaker keeps a record of pipelines triggered in the past, making traceability and auditability easier (Spinnaker users can see the status of a deployment even months later).

- On its own, Jenkins cannot create an EC2 instance or any other cloud resource. Spinnaker can, off the shelf, bake images, create resources, and deploy as part of the CD pipeline.
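Cluster locking, mentioned among the deployment safeguards above, boils down to mutual exclusion per target cluster. The following Python sketch is a hypothetical illustration of that idea, not the OES or Spinnaker implementation; the `ClusterLocks` class and the cluster names are invented for the example. A second deployment to a cluster that is already locked is refused immediately, while deployments to other clusters proceed.

```python
"""Per-cluster deployment locking (hypothetical sketch, not OES/Spinnaker code)."""
import threading
from contextlib import contextmanager
from typing import Dict, Iterator


class ClusterLocks:
    def __init__(self) -> None:
        self._guard = threading.Lock()  # protects the dictionary of locks itself
        self._locks: Dict[str, threading.Lock] = {}

    def _lock_for(self, cluster: str) -> threading.Lock:
        # Lazily create exactly one lock per cluster name.
        with self._guard:
            return self._locks.setdefault(cluster, threading.Lock())

    @contextmanager
    def hold(self, cluster: str) -> Iterator[None]:
        lock = self._lock_for(cluster)
        # Non-blocking acquire: fail fast instead of racing another deployment.
        if not lock.acquire(blocking=False):
            raise RuntimeError(f"cluster {cluster!r} is locked by another deployment")
        try:
            yield
        finally:
            lock.release()  # always free the cluster, even if the deployment fails


if __name__ == "__main__":
    locks = ClusterLocks()
    with locks.hold("prod-us-east"):
        try:
            with locks.hold("prod-us-east"):
                pass
        except RuntimeError as err:
            print("second deploy refused:", err)
        with locks.hold("prod-eu-west"):
            print("a different cluster deploys in parallel")
    with locks.hold("prod-us-east"):
        print("lock released after the first deployment finished")
```

Failing fast rather than waiting keeps two pipelines from silently queuing changes against the same cluster; a real platform would also need locks that survive process restarts, which this in-memory sketch does not attempt.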